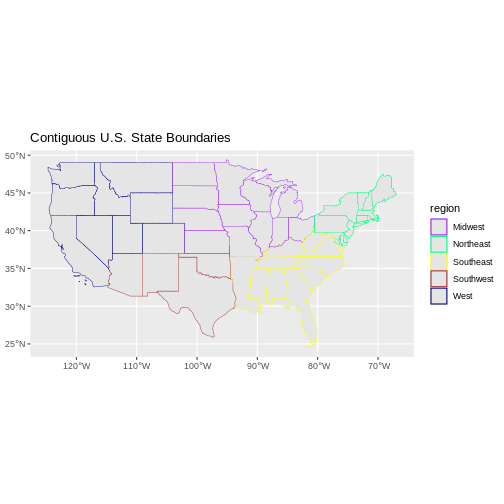

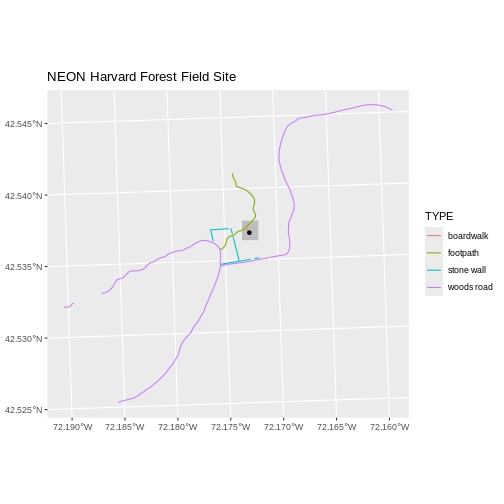

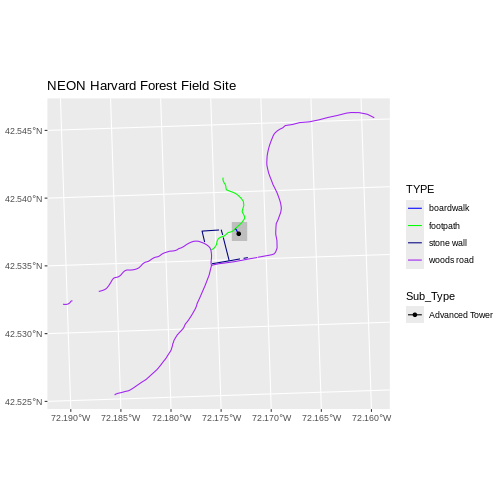

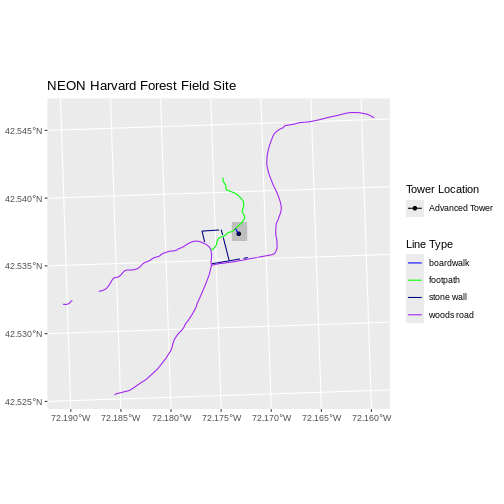

Content from Intro to Raster Data

Last updated on 2024-10-15 | Edit this page

Estimated time: 50 minutes

Overview

Questions

- What is a raster dataset?

- How do I work with and plot raster data in R?

- How can I handle missing or bad data values for a raster?

Objectives

- Describe the fundamental attributes of a raster dataset.

- Explore raster attributes and metadata using R.

- Import rasters into R using the

terrapackage. - Plot a raster file in R using the

ggplot2package. - Describe the difference between single- and multi-band rasters.

Things You’ll Need To Complete This Episode

See the lesson homepage for detailed information about the software, data, and other prerequisites you will need to work through the examples in this episode.

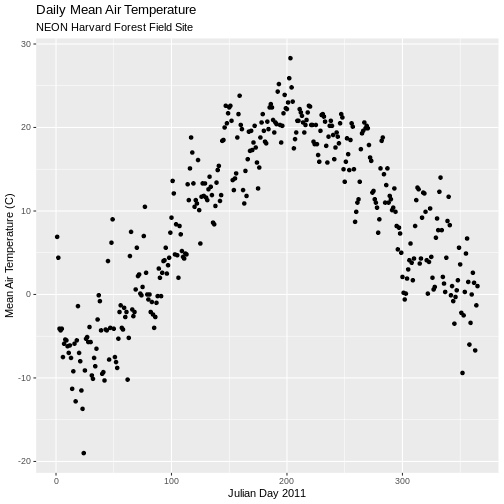

In this episode, we will introduce the fundamental principles, packages and metadata/raster attributes that are needed to work with raster data in R. We will discuss some of the core metadata elements that we need to understand to work with rasters in R, including CRS and resolution. We will also explore missing and bad data values as stored in a raster and how R handles these elements.

We will continue to work with the dplyr and

ggplot2 packages that were introduced in the Introduction to R

for Geospatial Data lesson. We will use two additional packages in

this episode to work with raster data - the terra and

sf packages. Make sure that you have these packages

loaded.

R

library(terra)

library(ggplot2)

library(dplyr)

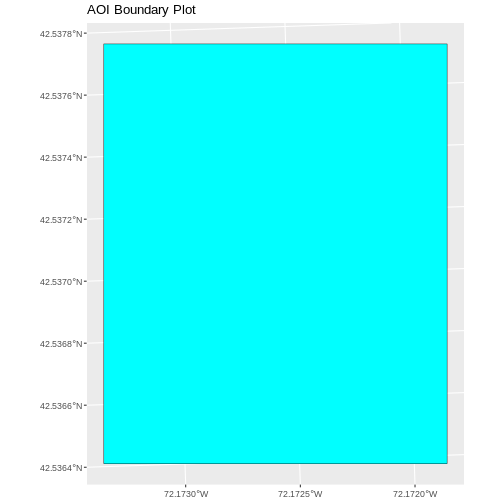

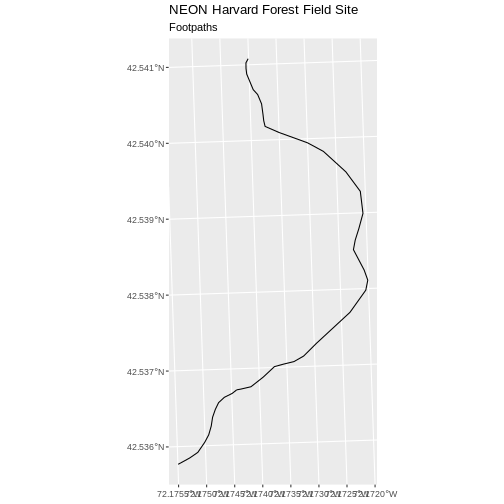

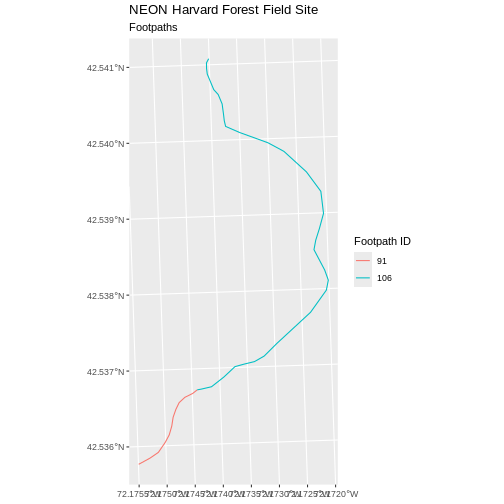

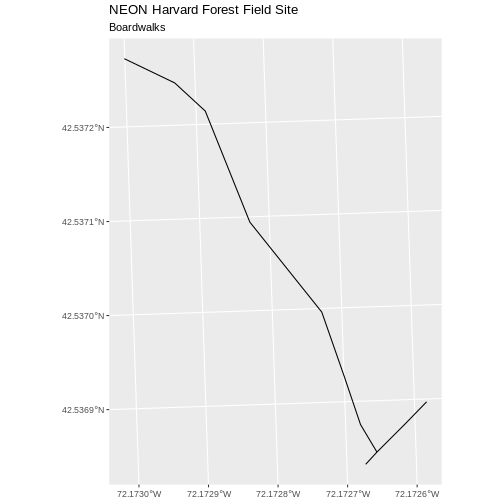

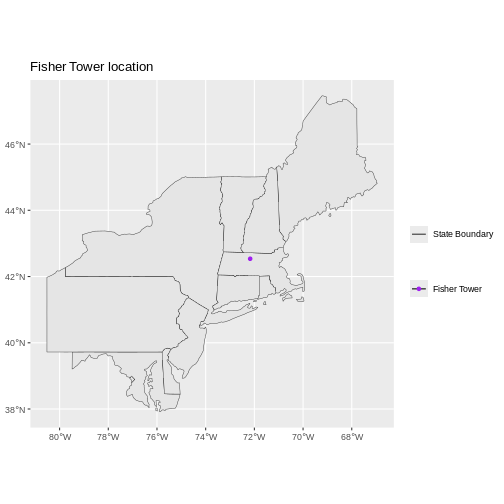

Introduce the Data

If not already discussed, introduce the datasets that will be used in this lesson. A brief introduction to the datasets can be found on the Geospatial workshop homepage.

For more detailed information about the datasets, check out the Geospatial workshop data page.

View Raster File Attributes

We will be working with a series of GeoTIFF files in this lesson. The

GeoTIFF format contains a set of embedded tags with metadata about the

raster data. We can use the function describe() to get

information about our raster data before we read that data into R. It is

ideal to do this before importing your data.

R

describe("data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif")

OUTPUT

[1] "Driver: GTiff/GeoTIFF"

[2] "Files: data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif"

[3] "Size is 1697, 1367"

[4] "Coordinate System is:"

[5] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[6] " BASEGEOGCRS[\"WGS 84\","

[7] " DATUM[\"World Geodetic System 1984\","

[8] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[9] " LENGTHUNIT[\"metre\",1]]],"

[10] " PRIMEM[\"Greenwich\",0,"

[11] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[12] " ID[\"EPSG\",4326]],"

[13] " CONVERSION[\"UTM zone 18N\","

[14] " METHOD[\"Transverse Mercator\","

[15] " ID[\"EPSG\",9807]],"

[16] " PARAMETER[\"Latitude of natural origin\",0,"

[17] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[18] " ID[\"EPSG\",8801]],"

[19] " PARAMETER[\"Longitude of natural origin\",-75,"

[20] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[21] " ID[\"EPSG\",8802]],"

[22] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[23] " SCALEUNIT[\"unity\",1],"

[24] " ID[\"EPSG\",8805]],"

[25] " PARAMETER[\"False easting\",500000,"

[26] " LENGTHUNIT[\"metre\",1],"

[27] " ID[\"EPSG\",8806]],"

[28] " PARAMETER[\"False northing\",0,"

[29] " LENGTHUNIT[\"metre\",1],"

[30] " ID[\"EPSG\",8807]]],"

[31] " CS[Cartesian,2],"

[32] " AXIS[\"(E)\",east,"

[33] " ORDER[1],"

[34] " LENGTHUNIT[\"metre\",1]],"

[35] " AXIS[\"(N)\",north,"

[36] " ORDER[2],"

[37] " LENGTHUNIT[\"metre\",1]],"

[38] " USAGE["

[39] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[40] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[41] " BBOX[0,-78,84,-72]],"

[42] " ID[\"EPSG\",32618]]"

[43] "Data axis to CRS axis mapping: 1,2"

[44] "Origin = (731453.000000000000000,4713838.000000000000000)"

[45] "Pixel Size = (1.000000000000000,-1.000000000000000)"

[46] "Metadata:"

[47] " AREA_OR_POINT=Area"

[48] "Image Structure Metadata:"

[49] " COMPRESSION=LZW"

[50] " INTERLEAVE=BAND"

[51] "Corner Coordinates:"

[52] "Upper Left ( 731453.000, 4713838.000) ( 72d10'52.71\"W, 42d32'32.18\"N)"

[53] "Lower Left ( 731453.000, 4712471.000) ( 72d10'54.71\"W, 42d31'47.92\"N)"

[54] "Upper Right ( 733150.000, 4713838.000) ( 72d 9'38.40\"W, 42d32'30.35\"N)"

[55] "Lower Right ( 733150.000, 4712471.000) ( 72d 9'40.41\"W, 42d31'46.08\"N)"

[56] "Center ( 732301.500, 4713154.500) ( 72d10'16.56\"W, 42d32' 9.13\"N)"

[57] "Band 1 Block=1697x1 Type=Float64, ColorInterp=Gray"

[58] " Min=305.070 Max=416.070 "

[59] " Minimum=305.070, Maximum=416.070, Mean=359.853, StdDev=17.832"

[60] " NoData Value=-9999"

[61] " Metadata:"

[62] " STATISTICS_MAXIMUM=416.06997680664"

[63] " STATISTICS_MEAN=359.85311802914"

[64] " STATISTICS_MINIMUM=305.07000732422"

[65] " STATISTICS_STDDEV=17.83169335933" If you wish to store this information in R, you can do the following:

R

HARV_dsmCrop_info <- capture.output(

describe("data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif")

)

Each line of text that was printed to the console is now stored as an

element of the character vector HARV_dsmCrop_info. We will

be exploring this data throughout this episode. By the end of this

episode, you will be able to explain and understand the output

above.

Open a Raster in R

Now that we’ve previewed the metadata for our GeoTIFF, let’s import

this raster dataset into R and explore its metadata more closely. We can

use the rast() function to open a raster in R.

Data Tip - Object names

To improve code readability, file and object names should be used

that make it clear what is in the file. The data for this episode were

collected from Harvard Forest so we’ll use a naming convention of

datatype_HARV.

First we will load our raster file into R and view the data structure.

R

DSM_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif")

DSM_HARV

OUTPUT

class : SpatRaster

dimensions : 1367, 1697, 1 (nrow, ncol, nlyr)

resolution : 1, 1 (x, y)

extent : 731453, 733150, 4712471, 4713838 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source : HARV_dsmCrop.tif

name : HARV_dsmCrop

min value : 305.07

max value : 416.07 The information above includes a report of min and max values, but no other data range statistics. Similar to other R data structures like vectors and data frame columns, descriptive statistics for raster data can be retrieved like

R

summary(DSM_HARV)

WARNING

Warning: [summary] used a sampleOUTPUT

HARV_dsmCrop

Min. :305.6

1st Qu.:345.6

Median :359.6

Mean :359.8

3rd Qu.:374.3

Max. :414.7 but note the warning - unless you force R to calculate these

statistics using every cell in the raster, it will take a random sample

of 100,000 cells and calculate from that instead. To force calculation

all the values, you can use the function values:

R

summary(values(DSM_HARV))

OUTPUT

HARV_dsmCrop

Min. :305.1

1st Qu.:345.6

Median :359.7

Mean :359.9

3rd Qu.:374.3

Max. :416.1 To visualise this data in R using ggplot2, we need to

convert it to a dataframe. We learned about dataframes in an

earlier lesson. The terra package has an built-in

function for conversion to a plotable dataframe.

R

DSM_HARV_df <- as.data.frame(DSM_HARV, xy = TRUE)

Now when we view the structure of our data, we will see a standard dataframe format.

R

str(DSM_HARV_df)

OUTPUT

'data.frame': 2319799 obs. of 3 variables:

$ x : num 731454 731454 731456 731456 731458 ...

$ y : num 4713838 4713838 4713838 4713838 4713838 ...

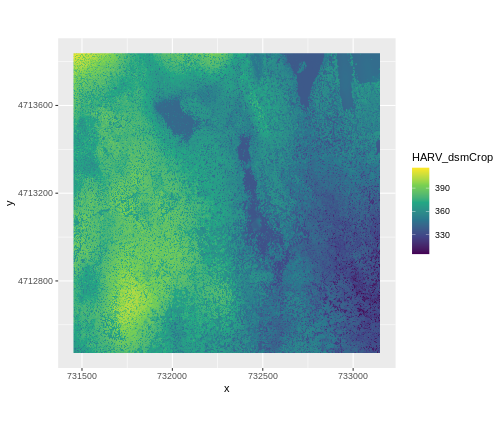

$ HARV_dsmCrop: num 409 408 407 407 409 ...We can use ggplot() to plot this data. We will set the

color scale to scale_fill_viridis_c which is a

color-blindness friendly color scale. We will also use the

coord_quickmap() function to use an approximate Mercator

projection for our plots. This approximation is suitable for small areas

that are not too close to the poles. Other coordinate systems are

available in ggplot2 if needed, you can learn about them at their help

page ?coord_map.

R

ggplot() +

geom_raster(data = DSM_HARV_df , aes(x = x, y = y, fill = HARV_dsmCrop)) +

scale_fill_viridis_c() +

coord_quickmap()

Plotting Tip

More information about the Viridis palette used above at R Viridis package documentation.

Plotting Tip

For faster, simpler plots, you can use the plot function

from the terra package.

See ?plot for more arguments to customize the plot

R

plot(DSM_HARV)

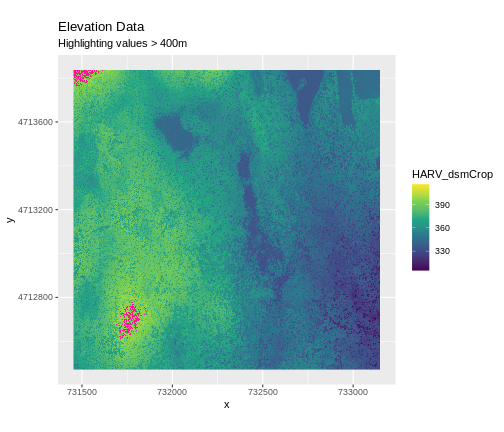

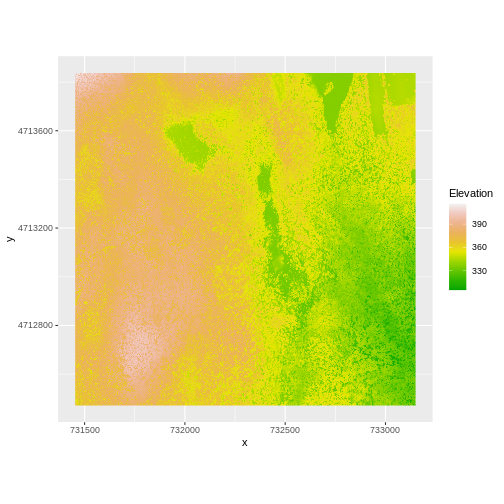

This map shows the elevation of our study site in Harvard Forest. From the legend, we can see that the maximum elevation is ~400, but we can’t tell whether this is 400 feet or 400 meters because the legend doesn’t show us the units. We can look at the metadata of our object to see what the units are. Much of the metadata that we’re interested in is part of the CRS. We introduced the concept of a CRS in an earlier lesson.

Now we will see how features of the CRS appear in our data file and what meanings they have.

View Raster Coordinate Reference System (CRS) in R

We can view the CRS string associated with our R object using

thecrs() function.

R

crs(DSM_HARV, proj = TRUE)

OUTPUT

[1] "+proj=utm +zone=18 +datum=WGS84 +units=m +no_defs"Challenge

What units are our data in?

+units=m tells us that our data is in meters.

Understanding CRS in Proj4 Format

The CRS for our data is given to us by R in proj4

format. Let’s break down the pieces of proj4 string. The

string contains all of the individual CRS elements that R or another GIS

might need. Each element is specified with a + sign,

similar to how a .csv file is delimited or broken up by a

,. After each + we see the CRS element being

defined. For example projection (proj=) and datum

(datum=).

UTM Proj4 String

A projection string (like the one of DSM_HARV) specifies

the UTM projection as follows:

+proj=utm +zone=18 +datum=WGS84 +units=m +no_defs +ellps=WGS84 +towgs84=0,0,0

- proj=utm: the projection is UTM, UTM has several zones.

- zone=18: the zone is 18

- datum=WGS84: the datum is WGS84 (the datum refers to the 0,0 reference for the coordinate system used in the projection)

- units=m: the units for the coordinates are in meters

- ellps=WGS84: the ellipsoid (how the earth’s roundness is calculated) for the data is WGS84

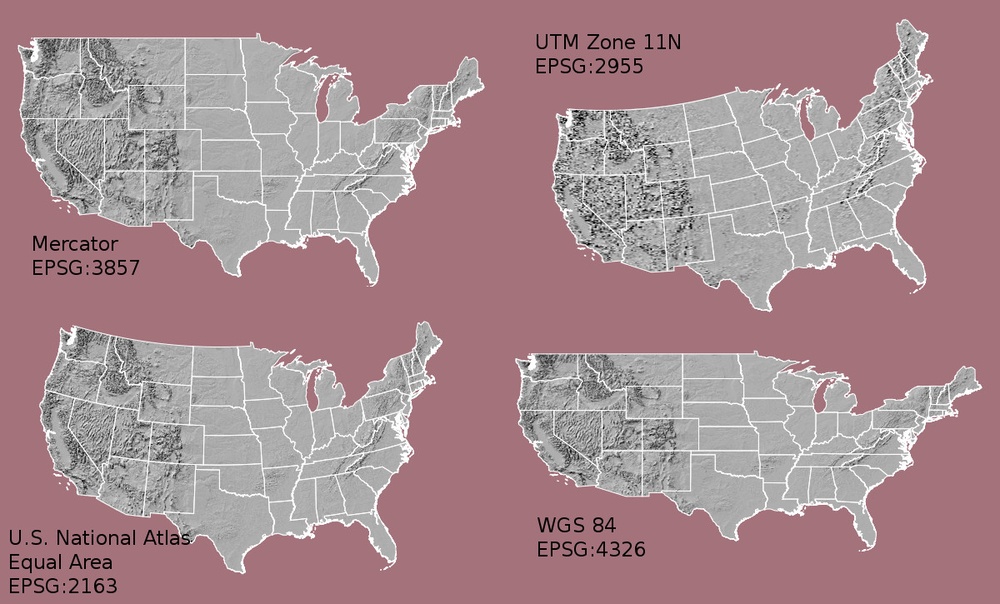

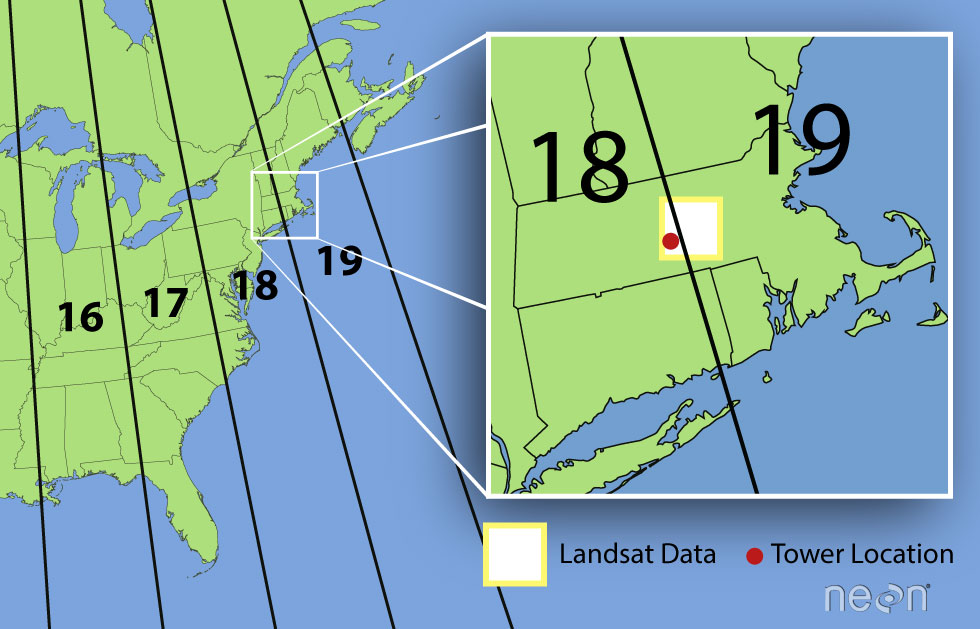

Note that the zone is unique to the UTM projection. Not all CRSs will have a zone. Image source: Chrismurf at English Wikipedia, via Wikimedia Commons (CC-BY).

Calculate Raster Min and Max Values

It is useful to know the minimum or maximum values of a raster dataset. In this case, given we are working with elevation data, these values represent the min/max elevation range at our site.

Raster statistics are often calculated and embedded in a GeoTIFF for us. We can view these values:

R

minmax(DSM_HARV)

OUTPUT

HARV_dsmCrop

min 305.07

max 416.07R

min(values(DSM_HARV))

OUTPUT

[1] 305.07R

max(values(DSM_HARV))

OUTPUT

[1] 416.07Data Tip - Set min and max values

If the minimum and maximum values haven’t already been calculated, we

can calculate them using the setMinMax() function.

R

DSM_HARV <- setMinMax(DSM_HARV)

We can see that the elevation at our site ranges from 305.0700073m to 416.0699768m.

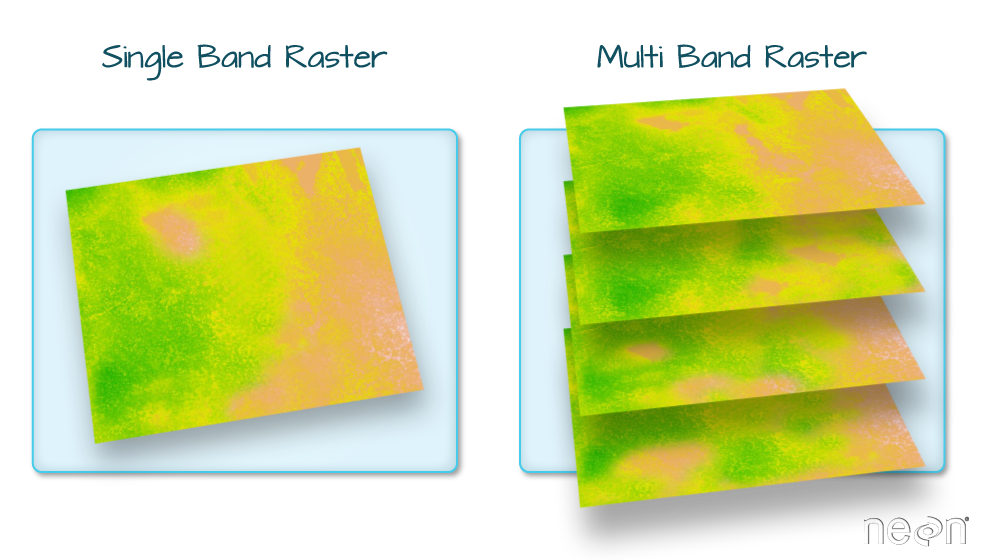

Raster Bands

The Digital Surface Model object (DSM_HARV) that we’ve

been working with is a single band raster. This means that there is only

one dataset stored in the raster: surface elevation in meters for one

time period.

A raster dataset can contain one or more bands. We can use the

rast() function to import one single band from a single or

multi-band raster. We can view the number of bands in a raster using the

nlyr() function.

R

nlyr(DSM_HARV)

OUTPUT

[1] 1However, raster data can also be multi-band, meaning that one raster file contains data for more than one variable or time period for each cell. Jump to a later episode in this series for information on working with multi-band rasters: Work with Multi-band Rasters in R.

Dealing with Missing Data

Raster data often has a NoDataValue associated with it.

This is a value assigned to pixels where data is missing or no data were

collected.

By default the shape of a raster is always rectangular. So if we have

a dataset that has a shape that isn’t rectangular, some pixels at the

edge of the raster will have NoDataValues. This often

happens when the data were collected by an airplane which only flew over

some part of a defined region.

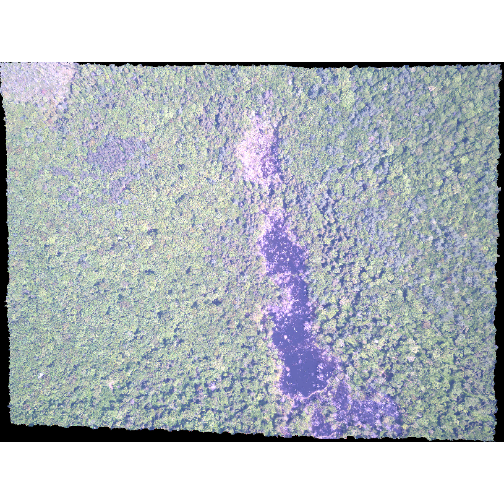

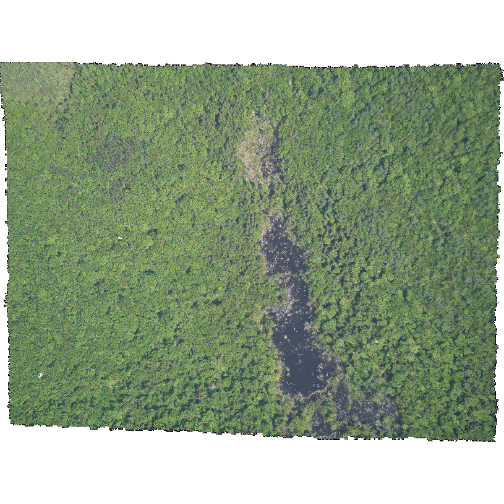

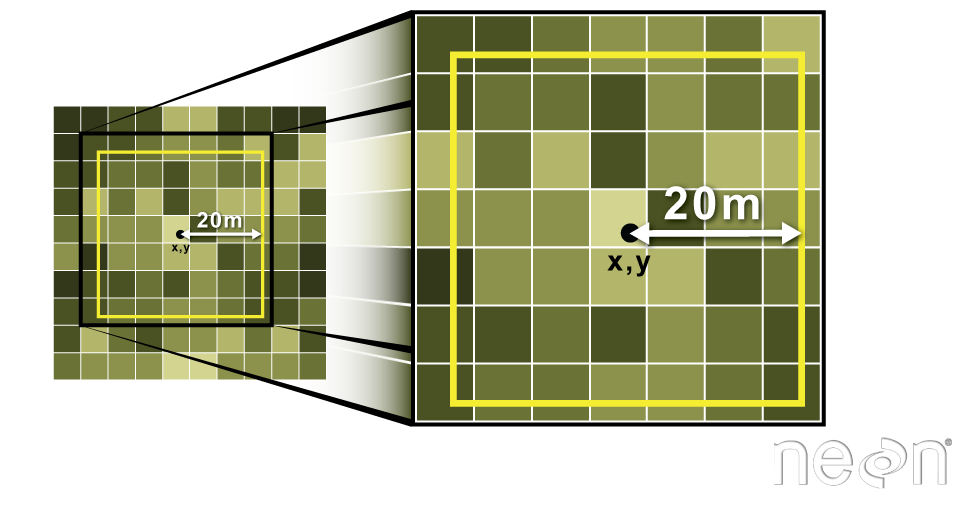

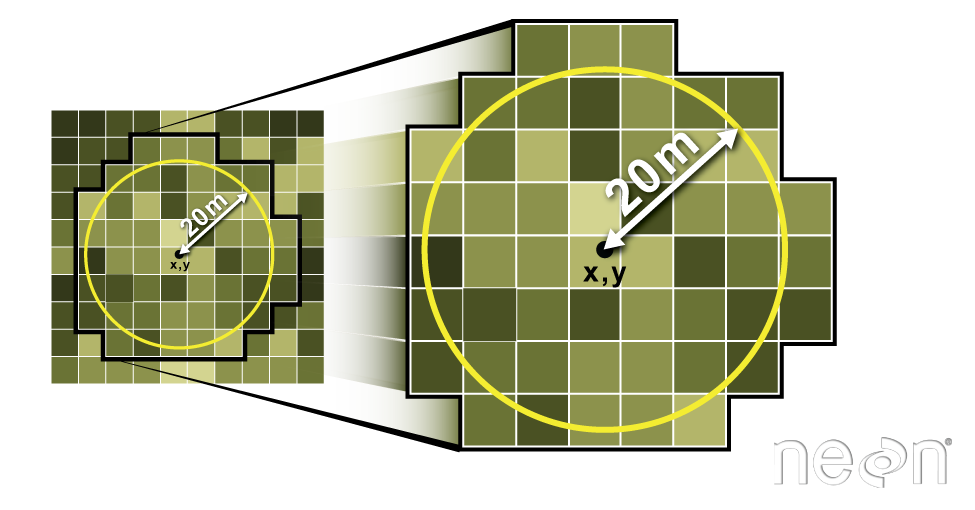

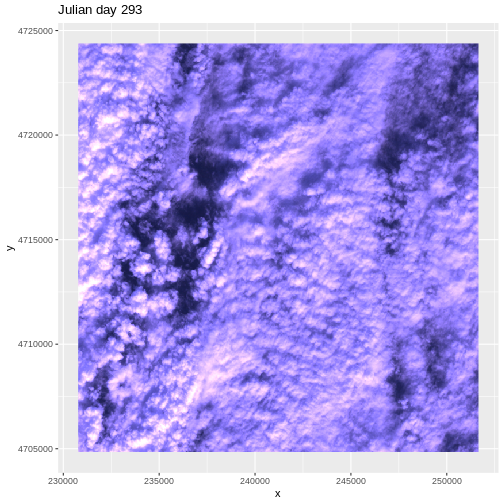

In the image below, the pixels that are black have

NoDataValues. The camera did not collect data in these

areas.

In the next image, the black edges have been assigned

NoDataValue. R doesn’t render pixels that contain a

specified NoDataValue. R assigns missing data with the

NoDataValue as NA.

The difference here shows up as ragged edges on the plot, rather than black spaces where there is no data.

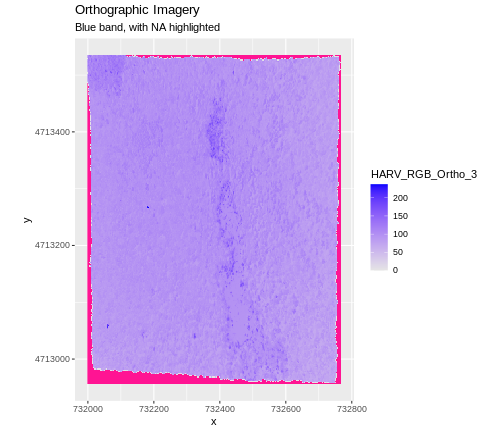

If your raster already has NA values set correctly but

you aren’t sure where they are, you can deliberately plot them in a

particular colour. This can be useful when checking a dataset’s

coverage. For instance, sometimes data can be missing where a sensor

could not ‘see’ its target data, and you may wish to locate that missing

data and fill it in.

To highlight NA values in ggplot, alter the

scale_fill_*() layer to contain a colour instruction for

NA values, like

scale_fill_viridis_c(na.value = 'deeppink')

The value that is conventionally used to take note of missing data

(the NoDataValue value) varies by the raster data type. For

floating-point rasters, the figure -3.4e+38 is a common

default, and for integers, -9999 is common. Some

disciplines have specific conventions that vary from these common

values.

In some cases, other NA values may be more appropriate.

An NA value should be a) outside the range of valid values,

and b) a value that fits the data type in use. For instance, if your

data ranges continuously from -20 to 100, 0 is not an acceptable

NA value! Or, for categories that number 1-15, 0 might be

fine for NA, but using -.000003 will force you to save the

GeoTIFF on disk as a floating point raster, resulting in a bigger

file.

If we are lucky, our GeoTIFF file has a tag that tells us what is the

NoDataValue. If we are less lucky, we can find that

information in the raster’s metadata. If a NoDataValue was

stored in the GeoTIFF tag, when R opens up the raster, it will assign

each instance of the value to NA. Values of NA

will be ignored by R as demonstrated above.

Challenge

Use the output from the describe() and

sources() functions to find out what

NoDataValue is used for our DSM_HARV

dataset.

R

describe(sources(DSM_HARV))

OUTPUT

[1] "Driver: GTiff/GeoTIFF"

[2] "Files: /home/runner/work/r-raster-vector-geospatial/r-raster-vector-geospatial/site/built/data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif"

[3] "Size is 1697, 1367"

[4] "Coordinate System is:"

[5] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[6] " BASEGEOGCRS[\"WGS 84\","

[7] " DATUM[\"World Geodetic System 1984\","

[8] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[9] " LENGTHUNIT[\"metre\",1]]],"

[10] " PRIMEM[\"Greenwich\",0,"

[11] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[12] " ID[\"EPSG\",4326]],"

[13] " CONVERSION[\"UTM zone 18N\","

[14] " METHOD[\"Transverse Mercator\","

[15] " ID[\"EPSG\",9807]],"

[16] " PARAMETER[\"Latitude of natural origin\",0,"

[17] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[18] " ID[\"EPSG\",8801]],"

[19] " PARAMETER[\"Longitude of natural origin\",-75,"

[20] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[21] " ID[\"EPSG\",8802]],"

[22] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[23] " SCALEUNIT[\"unity\",1],"

[24] " ID[\"EPSG\",8805]],"

[25] " PARAMETER[\"False easting\",500000,"

[26] " LENGTHUNIT[\"metre\",1],"

[27] " ID[\"EPSG\",8806]],"

[28] " PARAMETER[\"False northing\",0,"

[29] " LENGTHUNIT[\"metre\",1],"

[30] " ID[\"EPSG\",8807]]],"

[31] " CS[Cartesian,2],"

[32] " AXIS[\"(E)\",east,"

[33] " ORDER[1],"

[34] " LENGTHUNIT[\"metre\",1]],"

[35] " AXIS[\"(N)\",north,"

[36] " ORDER[2],"

[37] " LENGTHUNIT[\"metre\",1]],"

[38] " USAGE["

[39] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[40] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[41] " BBOX[0,-78,84,-72]],"

[42] " ID[\"EPSG\",32618]]"

[43] "Data axis to CRS axis mapping: 1,2"

[44] "Origin = (731453.000000000000000,4713838.000000000000000)"

[45] "Pixel Size = (1.000000000000000,-1.000000000000000)"

[46] "Metadata:"

[47] " AREA_OR_POINT=Area"

[48] "Image Structure Metadata:"

[49] " COMPRESSION=LZW"

[50] " INTERLEAVE=BAND"

[51] "Corner Coordinates:"

[52] "Upper Left ( 731453.000, 4713838.000) ( 72d10'52.71\"W, 42d32'32.18\"N)"

[53] "Lower Left ( 731453.000, 4712471.000) ( 72d10'54.71\"W, 42d31'47.92\"N)"

[54] "Upper Right ( 733150.000, 4713838.000) ( 72d 9'38.40\"W, 42d32'30.35\"N)"

[55] "Lower Right ( 733150.000, 4712471.000) ( 72d 9'40.41\"W, 42d31'46.08\"N)"

[56] "Center ( 732301.500, 4713154.500) ( 72d10'16.56\"W, 42d32' 9.13\"N)"

[57] "Band 1 Block=1697x1 Type=Float64, ColorInterp=Gray"

[58] " Min=305.070 Max=416.070 "

[59] " Minimum=305.070, Maximum=416.070, Mean=359.853, StdDev=17.832"

[60] " NoData Value=-9999"

[61] " Metadata:"

[62] " STATISTICS_MAXIMUM=416.06997680664"

[63] " STATISTICS_MEAN=359.85311802914"

[64] " STATISTICS_MINIMUM=305.07000732422"

[65] " STATISTICS_STDDEV=17.83169335933" NoDataValue are encoded as -9999.

Bad Data Values in Rasters

Bad data values are different from NoDataValues. Bad

data values are values that fall outside of the applicable range of a

dataset.

Examples of Bad Data Values:

- The normalized difference vegetation index (NDVI), which is a measure of greenness, has a valid range of -1 to 1. Any value outside of that range would be considered a “bad” or miscalculated value.

- Reflectance data in an image will often range from 0-1 or 0-10,000 depending upon how the data are scaled. Thus a value greater than 1 or greater than 10,000 is likely caused by an error in either data collection or processing.

Find Bad Data Values

Sometimes a raster’s metadata will tell us the range of expected values for a raster. Values outside of this range are suspect and we need to consider that when we analyze the data. Sometimes, we need to use some common sense and scientific insight as we examine the data - just as we would for field data to identify questionable values.

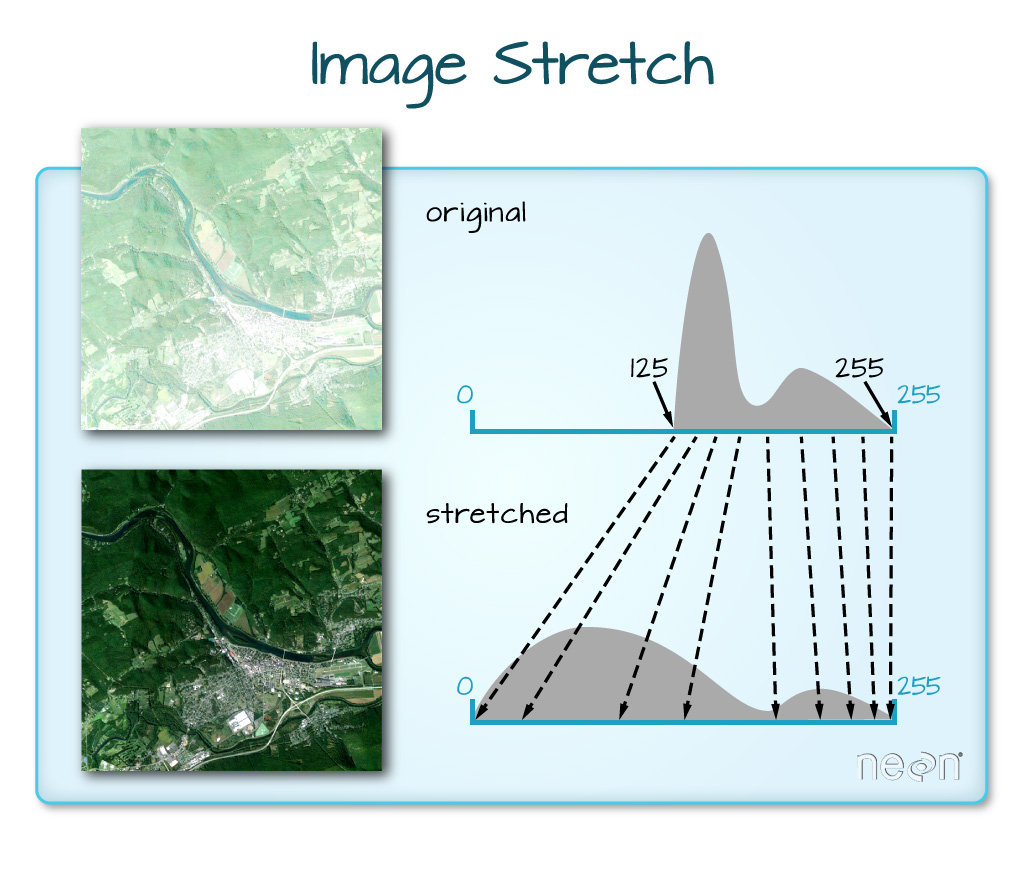

Plotting data with appropriate highlighting can help reveal patterns in bad values and may suggest a solution. Below, reclassification is used to highlight elevation values over 400m with a contrasting colour.

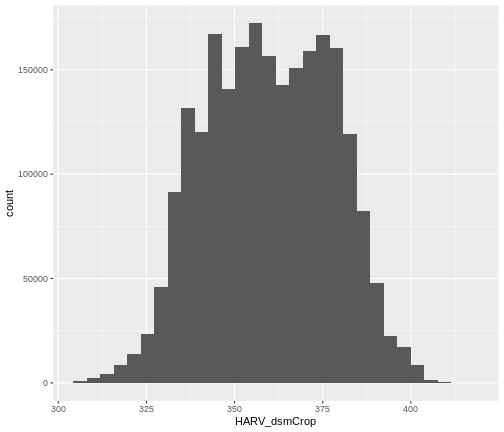

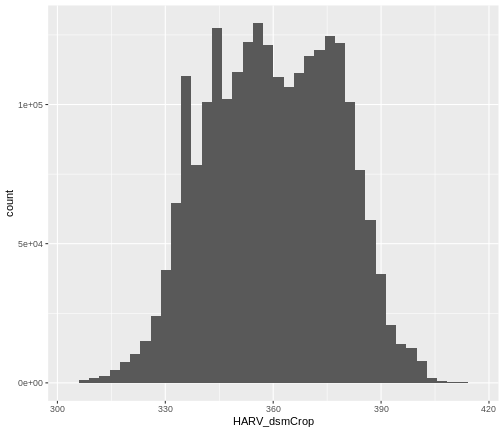

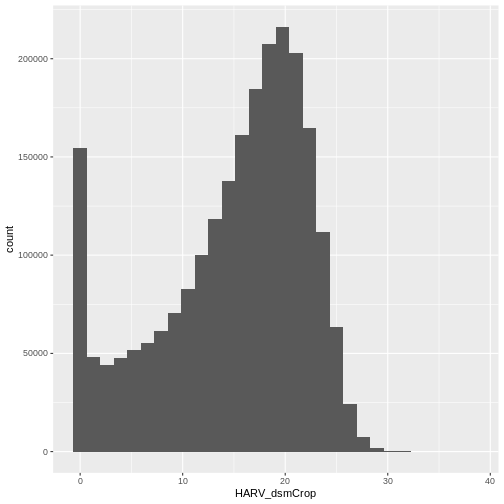

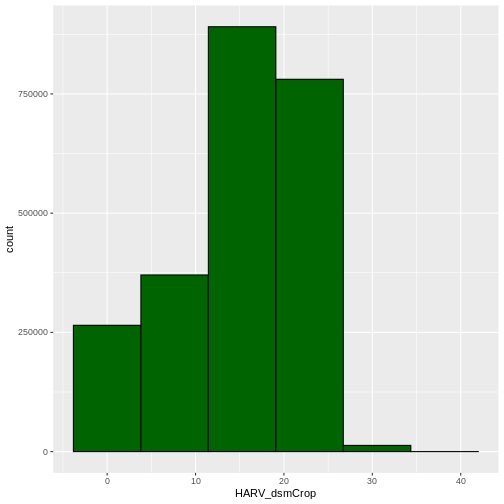

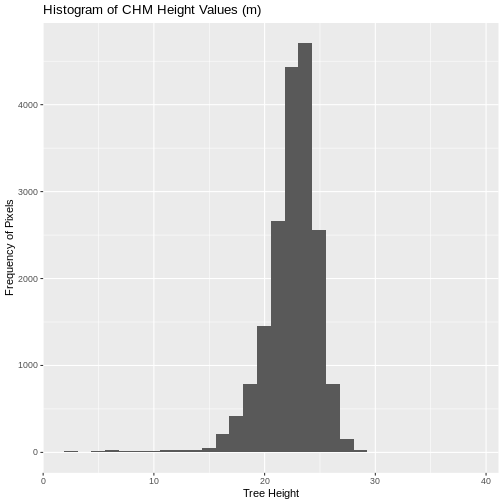

Create A Histogram of Raster Values

We can explore the distribution of values contained within our raster

using the geom_histogram() function which produces a

histogram. Histograms are often useful in identifying outliers and bad

data values in our raster data.

R

ggplot() +

geom_histogram(data = DSM_HARV_df, aes(HARV_dsmCrop))

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

Notice that a warning message is thrown when R creates the histogram.

stat_bin() using bins = 30. Pick better

value with binwidth.

This warning is caused by a default setting in

geom_histogram enforcing that there are 30 bins for the

data. We can define the number of bins we want in the histogram by using

the bins value in the geom_histogram()

function.

R

ggplot() +

geom_histogram(data = DSM_HARV_df, aes(HARV_dsmCrop), bins = 40)

Note that the shape of this histogram looks similar to the previous

one that was created using the default of 30 bins. The distribution of

elevation values for our Digital Surface Model (DSM) looks

reasonable. It is likely there are no bad data values in this particular

raster.

Challenge: Explore Raster Metadata

Use describe() to determine the following about the

NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_DSMhill.tif

file:

- Does this file have the same CRS as

DSM_HARV? - What is the

NoDataValue? - What is resolution of the raster data?

- How large would a 5x5 pixel area be on the Earth’s surface?

- Is the file a multi- or single-band raster?

Notice: this file is a hillshade. We will learn about hillshades in the Working with Multi-band Rasters in R episode.

R

describe("data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_DSMhill.tif")

OUTPUT

[1] "Driver: GTiff/GeoTIFF"

[2] "Files: data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_DSMhill.tif"

[3] "Size is 1697, 1367"

[4] "Coordinate System is:"

[5] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[6] " BASEGEOGCRS[\"WGS 84\","

[7] " DATUM[\"World Geodetic System 1984\","

[8] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[9] " LENGTHUNIT[\"metre\",1]]],"

[10] " PRIMEM[\"Greenwich\",0,"

[11] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[12] " ID[\"EPSG\",4326]],"

[13] " CONVERSION[\"UTM zone 18N\","

[14] " METHOD[\"Transverse Mercator\","

[15] " ID[\"EPSG\",9807]],"

[16] " PARAMETER[\"Latitude of natural origin\",0,"

[17] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[18] " ID[\"EPSG\",8801]],"

[19] " PARAMETER[\"Longitude of natural origin\",-75,"

[20] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[21] " ID[\"EPSG\",8802]],"

[22] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[23] " SCALEUNIT[\"unity\",1],"

[24] " ID[\"EPSG\",8805]],"

[25] " PARAMETER[\"False easting\",500000,"

[26] " LENGTHUNIT[\"metre\",1],"

[27] " ID[\"EPSG\",8806]],"

[28] " PARAMETER[\"False northing\",0,"

[29] " LENGTHUNIT[\"metre\",1],"

[30] " ID[\"EPSG\",8807]]],"

[31] " CS[Cartesian,2],"

[32] " AXIS[\"(E)\",east,"

[33] " ORDER[1],"

[34] " LENGTHUNIT[\"metre\",1]],"

[35] " AXIS[\"(N)\",north,"

[36] " ORDER[2],"

[37] " LENGTHUNIT[\"metre\",1]],"

[38] " USAGE["

[39] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[40] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[41] " BBOX[0,-78,84,-72]],"

[42] " ID[\"EPSG\",32618]]"

[43] "Data axis to CRS axis mapping: 1,2"

[44] "Origin = (731453.000000000000000,4713838.000000000000000)"

[45] "Pixel Size = (1.000000000000000,-1.000000000000000)"

[46] "Metadata:"

[47] " AREA_OR_POINT=Area"

[48] "Image Structure Metadata:"

[49] " COMPRESSION=LZW"

[50] " INTERLEAVE=BAND"

[51] "Corner Coordinates:"

[52] "Upper Left ( 731453.000, 4713838.000) ( 72d10'52.71\"W, 42d32'32.18\"N)"

[53] "Lower Left ( 731453.000, 4712471.000) ( 72d10'54.71\"W, 42d31'47.92\"N)"

[54] "Upper Right ( 733150.000, 4713838.000) ( 72d 9'38.40\"W, 42d32'30.35\"N)"

[55] "Lower Right ( 733150.000, 4712471.000) ( 72d 9'40.41\"W, 42d31'46.08\"N)"

[56] "Center ( 732301.500, 4713154.500) ( 72d10'16.56\"W, 42d32' 9.13\"N)"

[57] "Band 1 Block=1697x1 Type=Float64, ColorInterp=Gray"

[58] " Min=-0.714 Max=1.000 "

[59] " Minimum=-0.714, Maximum=1.000, Mean=0.313, StdDev=0.481"

[60] " NoData Value=-9999"

[61] " Metadata:"

[62] " STATISTICS_MAXIMUM=0.99999973665016"

[63] " STATISTICS_MEAN=0.31255246777216"

[64] " STATISTICS_MINIMUM=-0.71362979358008"

[65] " STATISTICS_STDDEV=0.48129385401108" - If this file has the same CRS as DSM_HARV? Yes: UTM Zone 18, WGS84, meters.

- What format

NoDataValuestake? -9999 - The resolution of the raster data? 1x1

- How large a 5x5 pixel area would be? 5mx5m How? We are given resolution of 1x1 and units in meters, therefore resolution of 5x5 means 5x5m.

- Is the file a multi- or single-band raster? Single.

More Resources

Key Points

- The GeoTIFF file format includes metadata about the raster data.

- To plot raster data with the

ggplot2package, we need to convert it to a dataframe. - R stores CRS information in the Proj4 format.

- Be careful when dealing with missing or bad data values.

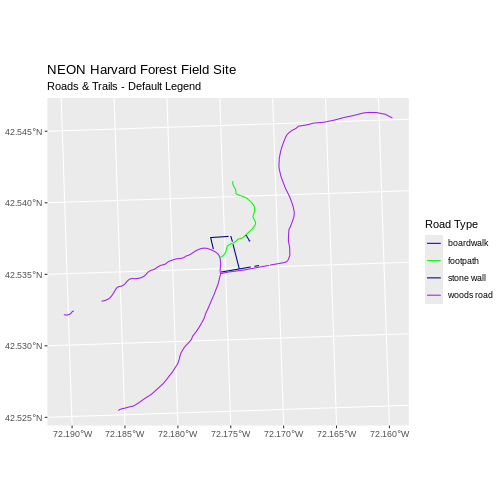

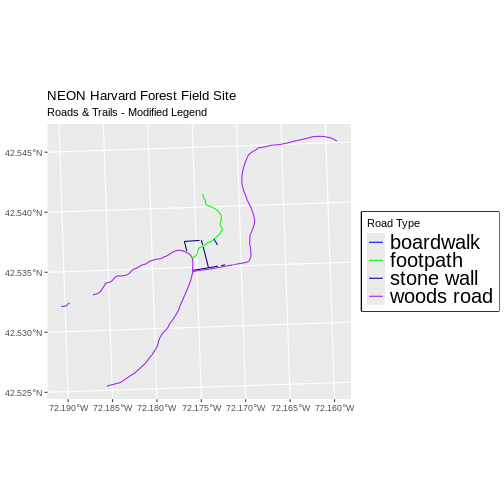

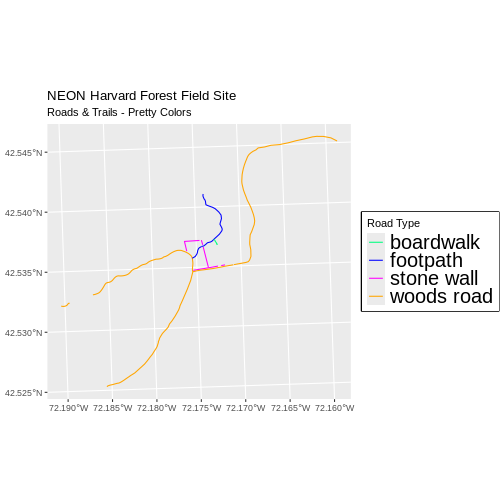

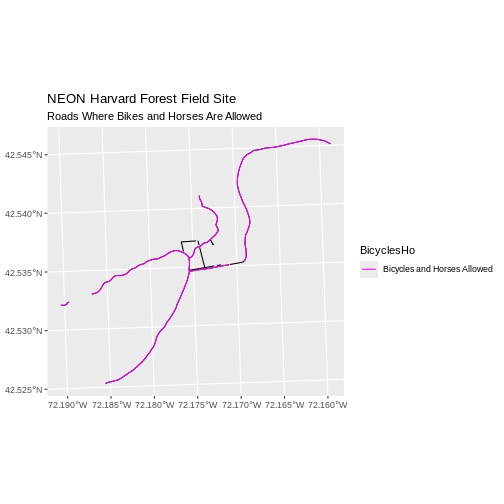

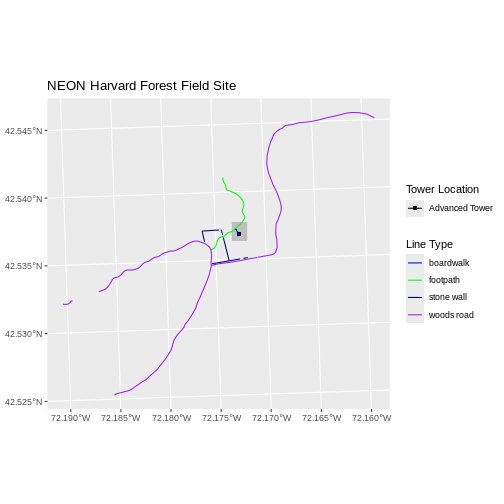

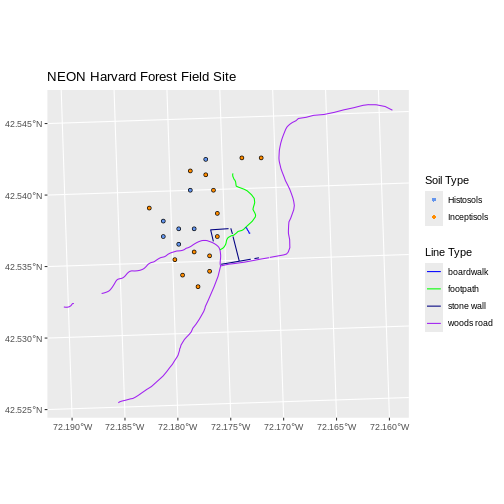

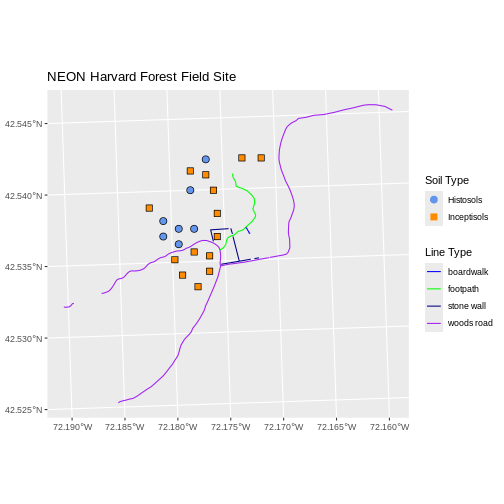

Content from Plot Raster Data

Last updated on 2024-10-15 | Edit this page

Estimated time: 70 minutes

Overview

Questions

- How can I create categorized or customized maps of raster data?

- How can I customize the color scheme of a raster image?

- How can I layer raster data in a single image?

Objectives

- Build customized plots for a single band raster using the

ggplot2package. - Layer a raster dataset on top of a hillshade to create an elegant basemap.

Things You’ll Need To Complete This Episode

See the lesson homepage for detailed information about the software, data, and other prerequisites you will need to work through the examples in this episode.

Plot Raster Data in R

This episode covers how to plot a raster in R using the

ggplot2 package with customized coloring schemes. It also

covers how to layer a raster on top of a hillshade to produce an

eloquent map. We will continue working with the Digital Surface Model

(DSM) raster for the NEON Harvard Forest Field Site.

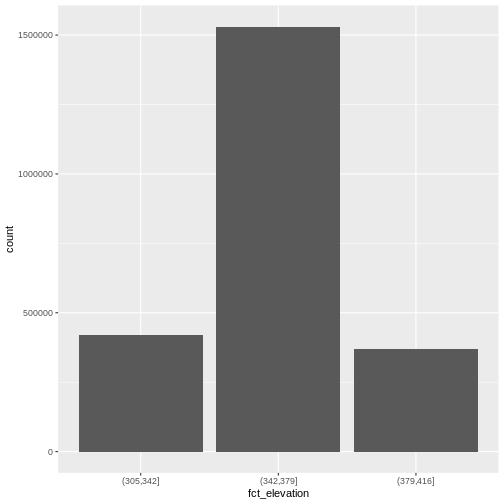

Plotting Data Using Breaks

In the previous episode, we viewed our data using a continuous color

ramp. For clarity and visibility of the plot, we may prefer to view the

data “symbolized” or colored according to ranges of values. This is

comparable to a “classified” map. To do this, we need to tell

ggplot how many groups to break our data into, and where

those breaks should be. To make these decisions, it is useful to first

explore the distribution of the data using a bar plot. To begin with, we

will use dplyr’s mutate() function combined

with cut() to split the data into 3 bins.

R

DSM_HARV_df <- DSM_HARV_df %>%

mutate(fct_elevation = cut(HARV_dsmCrop, breaks = 3))

ggplot() +

geom_bar(data = DSM_HARV_df, aes(fct_elevation))

If we want to know the cutoff values for the groups, we can ask for

the unique values of fct_elevation:

R

unique(DSM_HARV_df$fct_elevation)

OUTPUT

[1] (379,416] (342,379] (305,342]

Levels: (305,342] (342,379] (379,416]And we can get the count of values in each group using

dplyr’s count() function:

R

DSM_HARV_df %>%

count(fct_elevation)

OUTPUT

fct_elevation n

1 (305,342] 418891

2 (342,379] 1530073

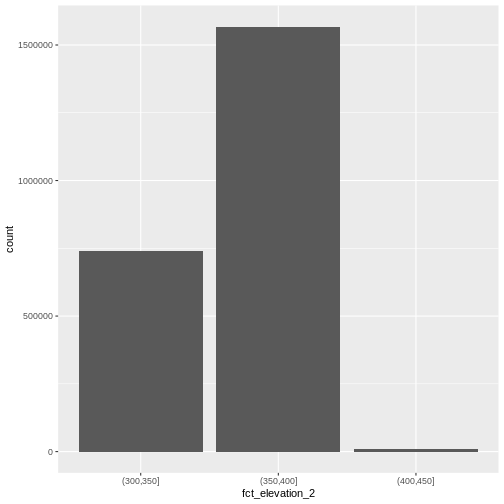

3 (379,416] 370835We might prefer to customize the cutoff values for these groups. Lets

round the cutoff values so that we have groups for the ranges of 301–350

m, 351–400 m, and 401–450 m. To implement this we will give

mutate() a numeric vector of break points instead of the

number of breaks we want.

R

custom_bins <- c(300, 350, 400, 450)

DSM_HARV_df <- DSM_HARV_df %>%

mutate(fct_elevation_2 = cut(HARV_dsmCrop, breaks = custom_bins))

unique(DSM_HARV_df$fct_elevation_2)

OUTPUT

[1] (400,450] (350,400] (300,350]

Levels: (300,350] (350,400] (400,450]Data Tips

Note that when we assign break values a set of 4 values will result in 3 bins of data.

The bin intervals are shown using ( to mean exclusive

and ] to mean inclusive. For example:

(305, 342] means “from 306 through 342”.

And now we can plot our bar plot again, using the new groups:

R

ggplot() +

geom_bar(data = DSM_HARV_df, aes(fct_elevation_2))

And we can get the count of values in each group in the same way we did before:

R

DSM_HARV_df %>%

count(fct_elevation_2)

OUTPUT

fct_elevation_2 n

1 (300,350] 741815

2 (350,400] 1567316

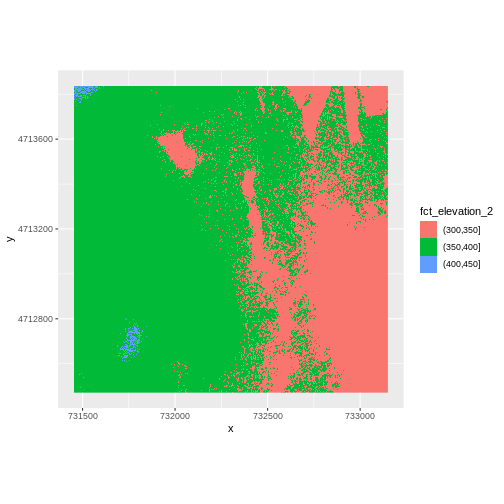

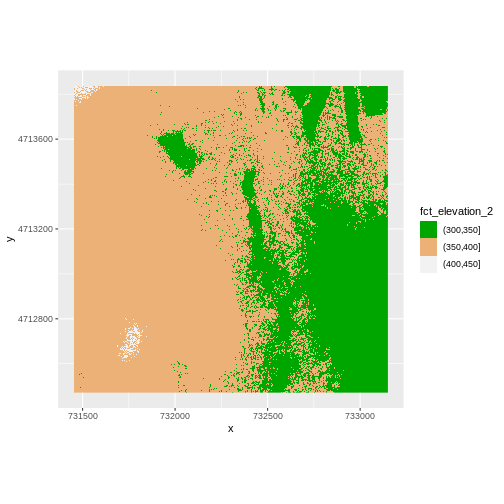

3 (400,450] 10668We can use those groups to plot our raster data, with each group being a different color:

R

ggplot() +

geom_raster(data = DSM_HARV_df , aes(x = x, y = y, fill = fct_elevation_2)) +

coord_quickmap()

The plot above uses the default colors inside ggplot for

raster objects. We can specify our own colors to make the plot look a

little nicer. R has a built in set of colors for plotting terrain, which

are built in to the terrain.colors() function. Since we

have three bins, we want to create a 3-color palette:

R

terrain.colors(3)

OUTPUT

[1] "#00A600" "#ECB176" "#F2F2F2"The terrain.colors() function returns hex

colors - each of these character strings represents a color. To use

these in our map, we pass them across using the

scale_fill_manual() function.

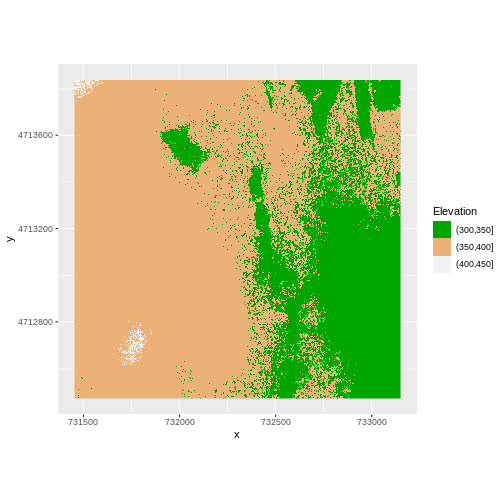

R

ggplot() +

geom_raster(data = DSM_HARV_df , aes(x = x, y = y,

fill = fct_elevation_2)) +

scale_fill_manual(values = terrain.colors(3)) +

coord_quickmap()

More Plot Formatting

If we need to create multiple plots using the same color palette, we

can create an R object (my_col) for the set of colors that

we want to use. We can then quickly change the palette across all plots

by modifying the my_col object, rather than each individual

plot.

We can label the x- and y-axes of our plot too using

xlab and ylab. We can also give the legend a

more meaningful title by passing a value to the name

argument of the scale_fill_manual() function.

R

my_col <- terrain.colors(3)

ggplot() +

geom_raster(data = DSM_HARV_df , aes(x = x, y = y,

fill = fct_elevation_2)) +

scale_fill_manual(values = my_col, name = "Elevation") +

coord_quickmap()

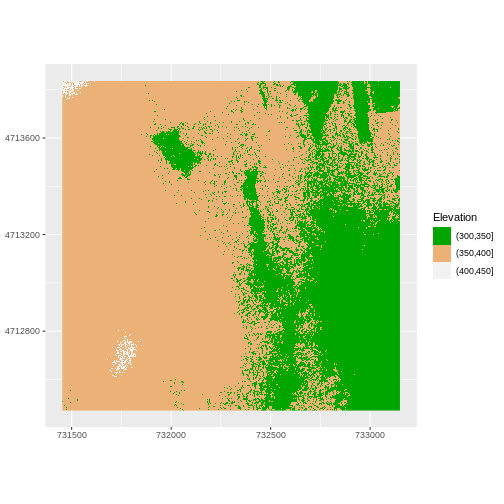

Or we can also turn off the labels of both axes by passing

element_blank() to the relevant part of the

theme() function.

R

ggplot() +

geom_raster(data = DSM_HARV_df , aes(x = x, y = y,

fill = fct_elevation_2)) +

scale_fill_manual(values = my_col, name = "Elevation") +

theme(axis.title = element_blank()) +

coord_quickmap()

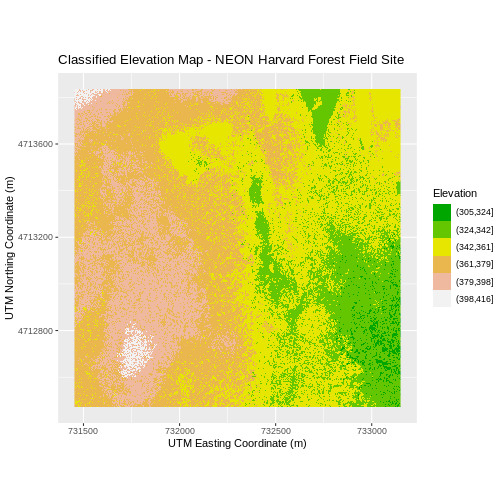

Challenge: Plot Using Custom Breaks

Create a plot of the Harvard Forest Digital Surface Model (DSM) that has:

- Six classified ranges of values (break points) that are evenly divided among the range of pixel values.

- Axis labels.

- A plot title.

R

DSM_HARV_df <- DSM_HARV_df %>%

mutate(fct_elevation_6 = cut(HARV_dsmCrop, breaks = 6))

my_col <- terrain.colors(6)

ggplot() +

geom_raster(data = DSM_HARV_df , aes(x = x, y = y,

fill = fct_elevation_6)) +

scale_fill_manual(values = my_col, name = "Elevation") +

ggtitle("Classified Elevation Map - NEON Harvard Forest Field Site") +

xlab("UTM Easting Coordinate (m)") +

ylab("UTM Northing Coordinate (m)") +

coord_quickmap()

Layering Rasters

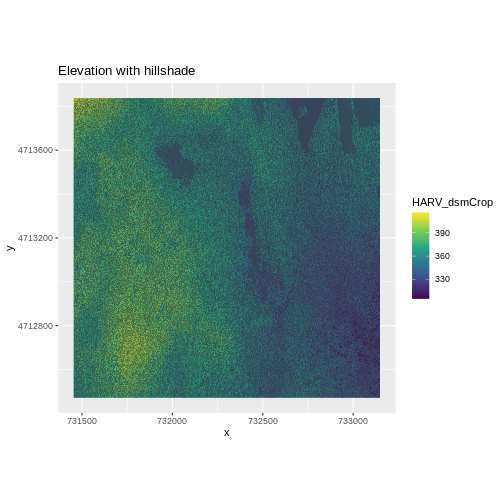

We can layer a raster on top of a hillshade raster for the same area, and use a transparency factor to create a 3-dimensional shaded effect. A hillshade is a raster that maps the shadows and texture that you would see from above when viewing terrain. We will add a custom color, making the plot grey.

First we need to read in our DSM hillshade data and view the structure:

R

DSM_hill_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_DSMhill.tif")

DSM_hill_HARV

OUTPUT

class : SpatRaster

dimensions : 1367, 1697, 1 (nrow, ncol, nlyr)

resolution : 1, 1 (x, y)

extent : 731453, 733150, 4712471, 4713838 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source : HARV_DSMhill.tif

name : HARV_DSMhill

min value : -0.7136298

max value : 0.9999997 Next we convert it to a dataframe, so that we can plot it using

ggplot2:

R

DSM_hill_HARV_df <- as.data.frame(DSM_hill_HARV, xy = TRUE)

str(DSM_hill_HARV_df)

OUTPUT

'data.frame': 2313675 obs. of 3 variables:

$ x : num 731454 731456 731456 731458 731458 ...

$ y : num 4713836 4713836 4713836 4713836 4713836 ...

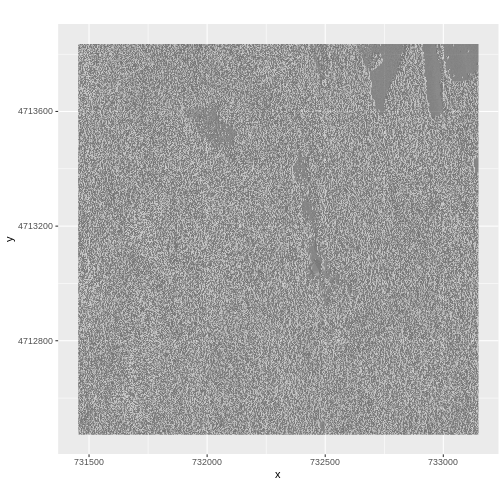

$ HARV_DSMhill: num -0.15567 0.00743 0.86989 0.9791 0.96283 ...Now we can plot the hillshade data:

R

ggplot() +

geom_raster(data = DSM_hill_HARV_df,

aes(x = x, y = y, alpha = HARV_DSMhill)) +

scale_alpha(range = c(0.15, 0.65), guide = "none") +

coord_quickmap()

Data Tips

Turn off, or hide, the legend on a plot by adding

guide = "none" to a scale_something() function

or by setting theme(legend.position = "none").

The alpha value determines how transparent the colors will be (0 being transparent, 1 being opaque).

We can layer another raster on top of our hillshade by adding another

call to the geom_raster() function. Let’s overlay

DSM_HARV on top of the hill_HARV.

R

ggplot() +

geom_raster(data = DSM_HARV_df ,

aes(x = x, y = y,

fill = HARV_dsmCrop)) +

geom_raster(data = DSM_hill_HARV_df,

aes(x = x, y = y,

alpha = HARV_DSMhill)) +

scale_fill_viridis_c() +

scale_alpha(range = c(0.15, 0.65), guide = "none") +

ggtitle("Elevation with hillshade") +

coord_quickmap()

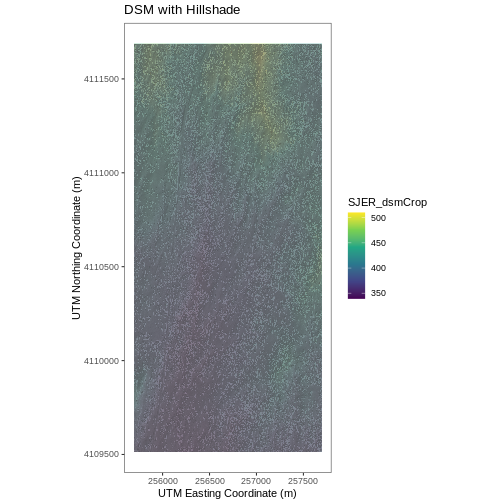

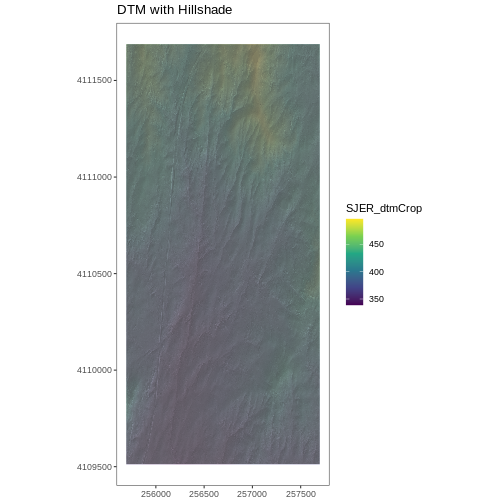

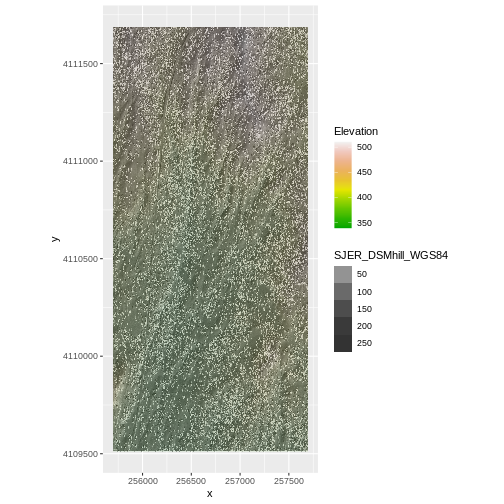

Challenge: Create DTM & DSM for SJER

Use the files in the

data/NEON-DS-Airborne-Remote-Sensing/SJER/ directory to

create a Digital Terrain Model map and Digital Surface Model map of the

San Joaquin Experimental Range field site.

Make sure to:

- include hillshade in the maps,

- label axes on the DSM map and exclude them from the DTM map,

- include a title for each map,

- experiment with various alpha values and color palettes to represent the data.

R

# CREATE DSM MAPS

# import DSM data

DSM_SJER <-

rast("data/NEON-DS-Airborne-Remote-Sensing/SJER/DSM/SJER_dsmCrop.tif")

# convert to a df for plotting

DSM_SJER_df <- as.data.frame(DSM_SJER, xy = TRUE)

# import DSM hillshade

DSM_hill_SJER <-

rast("data/NEON-DS-Airborne-Remote-Sensing/SJER/DSM/SJER_dsmHill.tif")

# convert to a df for plotting

DSM_hill_SJER_df <- as.data.frame(DSM_hill_SJER, xy = TRUE)

# Build Plot

ggplot() +

geom_raster(data = DSM_SJER_df ,

aes(x = x, y = y,

fill = SJER_dsmCrop,

alpha = 0.8)

) +

geom_raster(data = DSM_hill_SJER_df,

aes(x = x, y = y,

alpha = SJER_dsmHill)

) +

scale_fill_viridis_c() +

guides(fill = guide_colorbar()) +

scale_alpha(range = c(0.4, 0.7), guide = "none") +

# remove grey background and grid lines

theme_bw() +

theme(panel.grid.major = element_blank(),

panel.grid.minor = element_blank()) +

xlab("UTM Easting Coordinate (m)") +

ylab("UTM Northing Coordinate (m)") +

ggtitle("DSM with Hillshade") +

coord_quickmap()

R

# CREATE DTM MAP

# import DTM

DTM_SJER <-

rast("data/NEON-DS-Airborne-Remote-Sensing/SJER/DTM/SJER_dtmCrop.tif")

DTM_SJER_df <- as.data.frame(DTM_SJER, xy = TRUE)

# DTM Hillshade

DTM_hill_SJER <-

rast("data/NEON-DS-Airborne-Remote-Sensing/SJER/DTM/SJER_dtmHill.tif")

DTM_hill_SJER_df <- as.data.frame(DTM_hill_SJER, xy = TRUE)

ggplot() +

geom_raster(data = DTM_SJER_df ,

aes(x = x, y = y,

fill = SJER_dtmCrop,

alpha = 2.0)

) +

geom_raster(data = DTM_hill_SJER_df,

aes(x = x, y = y,

alpha = SJER_dtmHill)

) +

scale_fill_viridis_c() +

guides(fill = guide_colorbar()) +

scale_alpha(range = c(0.4, 0.7), guide = "none") +

theme_bw() +

theme(panel.grid.major = element_blank(),

panel.grid.minor = element_blank()) +

theme(axis.title.x = element_blank(),

axis.title.y = element_blank()) +

ggtitle("DTM with Hillshade") +

coord_quickmap()

Key Points

- Continuous data ranges can be grouped into categories using

mutate()andcut(). - Use built-in

terrain.colors()or set your preferred color scheme manually. - Layer rasters on top of one another by using the

alphaaesthetic.

Content from Reproject Raster Data

Last updated on 2024-10-15 | Edit this page

Estimated time: 60 minutes

Overview

Questions

- How do I work with raster data sets that are in different projections?

Objectives

- Reproject a raster in R.

Things You’ll Need To Complete This Episode

See the lesson homepage for detailed information about the software, data, and other prerequisites you will need to work through the examples in this episode.

Sometimes we encounter raster datasets that do not “line up” when

plotted or analyzed. Rasters that don’t line up are most often in

different Coordinate Reference Systems (CRS). This episode explains how

to deal with rasters in different, known CRSs. It will walk though

reprojecting rasters in R using the project() function in

the terra package.

Raster Projection in R

In the Plot Raster Data in R episode, we learned how to layer a raster file on top of a hillshade for a nice looking basemap. In that episode, all of our data were in the same CRS. What happens when things don’t line up?

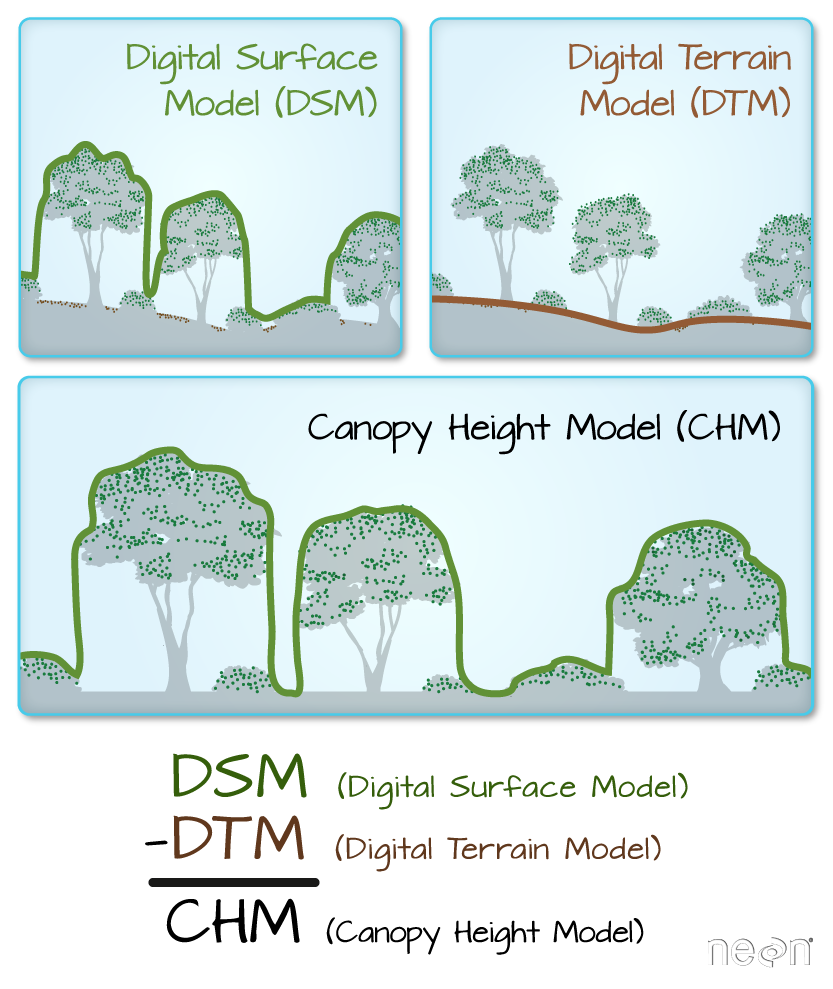

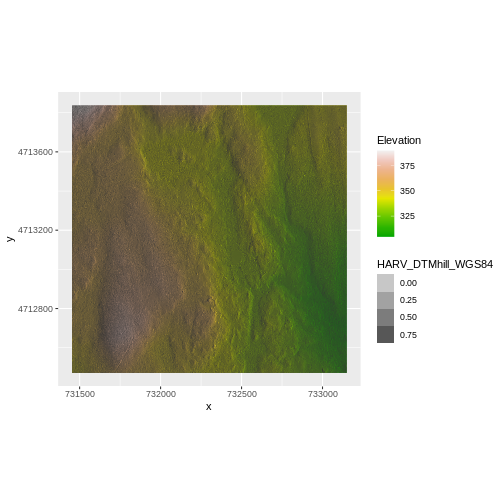

For this episode, we will be working with the Harvard Forest Digital Terrain Model data. This differs from the surface model data we’ve been working with so far in that the digital surface model (DSM) includes the tops of trees, while the digital terrain model (DTM) shows the ground level.

We’ll be looking at another model (the canopy height model) in a later episode and will see how

to calculate the CHM from the DSM and DTM. Here, we will create a map of

the Harvard Forest Digital Terrain Model (DTM_HARV) draped

or layered on top of the hillshade (DTM_hill_HARV). The

hillshade layer maps the terrain using light and shadow to create a

3D-looking image, based on a hypothetical illumination of the ground

level.

First, we need to import the DTM and DTM hillshade data.

R

DTM_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/DTM/HARV_dtmCrop.tif")

DTM_hill_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/DTM/HARV_DTMhill_WGS84.tif")

Next, we will convert each of these datasets to a dataframe for

plotting with ggplot.

R

DTM_HARV_df <- as.data.frame(DTM_HARV, xy = TRUE)

DTM_hill_HARV_df <- as.data.frame(DTM_hill_HARV, xy = TRUE)

Now we can create a map of the DTM layered over the hillshade.

R

ggplot() +

geom_raster(data = DTM_HARV_df ,

aes(x = x, y = y,

fill = HARV_dtmCrop)) +

geom_raster(data = DTM_hill_HARV_df,

aes(x = x, y = y,

alpha = HARV_DTMhill_WGS84)) +

scale_fill_gradientn(name = "Elevation", colors = terrain.colors(10)) +

coord_quickmap()

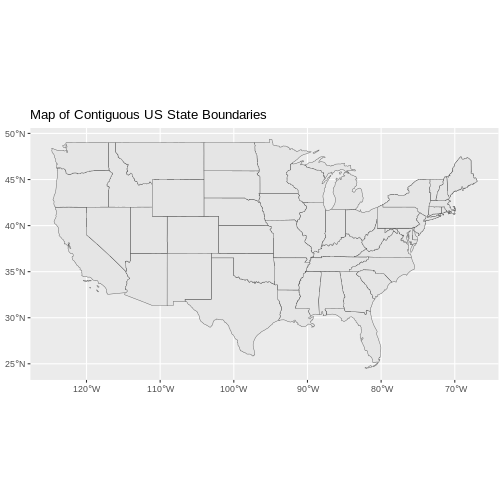

Our results are curious - neither the Digital Terrain Model

(DTM_HARV_df) nor the DTM Hillshade

(DTM_hill_HARV_df) plotted. Let’s try to plot the DTM on

its own to make sure there are data there.

R

ggplot() +

geom_raster(data = DTM_HARV_df,

aes(x = x, y = y,

fill = HARV_dtmCrop)) +

scale_fill_gradientn(name = "Elevation", colors = terrain.colors(10)) +

coord_quickmap()

Our DTM seems to contain data and plots just fine.

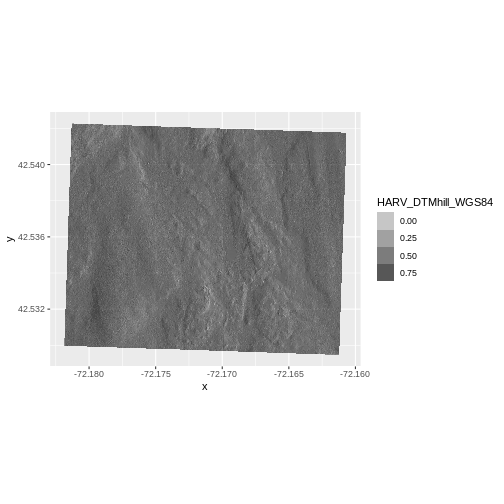

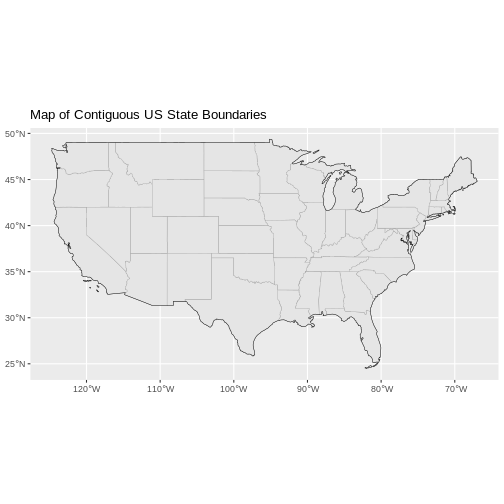

Next we plot the DTM Hillshade on its own to see whether everything is OK.

R

ggplot() +

geom_raster(data = DTM_hill_HARV_df,

aes(x = x, y = y,

alpha = HARV_DTMhill_WGS84)) +

coord_quickmap()

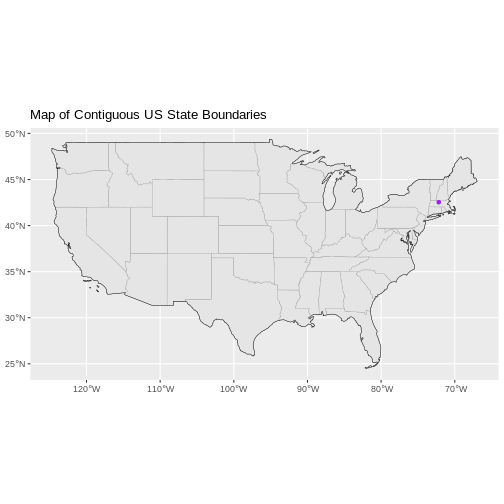

If we look at the axes, we can see that the projections of the two

rasters are different. When this is the case, ggplot won’t

render the image. It won’t even throw an error message to tell you

something has gone wrong. We can look at Coordinate Reference Systems

(CRSs) of the DTM and the hillshade data to see how they differ.

Exercise

View the CRS for each of these two datasets. What projection does each use?

R

# view crs for DTM

crs(DTM_HARV, parse = TRUE)

OUTPUT

[1] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[2] " BASEGEOGCRS[\"WGS 84\","

[3] " DATUM[\"World Geodetic System 1984\","

[4] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[5] " LENGTHUNIT[\"metre\",1]]],"

[6] " PRIMEM[\"Greenwich\",0,"

[7] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[8] " ID[\"EPSG\",4326]],"

[9] " CONVERSION[\"UTM zone 18N\","

[10] " METHOD[\"Transverse Mercator\","

[11] " ID[\"EPSG\",9807]],"

[12] " PARAMETER[\"Latitude of natural origin\",0,"

[13] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[14] " ID[\"EPSG\",8801]],"

[15] " PARAMETER[\"Longitude of natural origin\",-75,"

[16] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[17] " ID[\"EPSG\",8802]],"

[18] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[19] " SCALEUNIT[\"unity\",1],"

[20] " ID[\"EPSG\",8805]],"

[21] " PARAMETER[\"False easting\",500000,"

[22] " LENGTHUNIT[\"metre\",1],"

[23] " ID[\"EPSG\",8806]],"

[24] " PARAMETER[\"False northing\",0,"

[25] " LENGTHUNIT[\"metre\",1],"

[26] " ID[\"EPSG\",8807]]],"

[27] " CS[Cartesian,2],"

[28] " AXIS[\"(E)\",east,"

[29] " ORDER[1],"

[30] " LENGTHUNIT[\"metre\",1]],"

[31] " AXIS[\"(N)\",north,"

[32] " ORDER[2],"

[33] " LENGTHUNIT[\"metre\",1]],"

[34] " USAGE["

[35] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[36] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[37] " BBOX[0,-78,84,-72]],"

[38] " ID[\"EPSG\",32618]]" R

# view crs for hillshade

crs(DTM_hill_HARV, parse = TRUE)

OUTPUT

[1] "GEOGCRS[\"WGS 84\","

[2] " DATUM[\"World Geodetic System 1984\","

[3] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[4] " LENGTHUNIT[\"metre\",1]]],"

[5] " PRIMEM[\"Greenwich\",0,"

[6] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[7] " CS[ellipsoidal,2],"

[8] " AXIS[\"geodetic latitude (Lat)\",north,"

[9] " ORDER[1],"

[10] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[11] " AXIS[\"geodetic longitude (Lon)\",east,"

[12] " ORDER[2],"

[13] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[14] " ID[\"EPSG\",4326]]" DTM_HARV is in the UTM projection, with units of meters.

DTM_hill_HARV is in Geographic WGS84 - which

is represented by latitude and longitude values.

Because the two rasters are in different CRSs, they don’t line up

when plotted in R. We need to reproject (or change the projection of)

DTM_hill_HARV into the UTM CRS. Alternatively, we could

reproject DTM_HARV into WGS84.

Reproject Rasters

We can use the project() function to reproject a raster

into a new CRS. Keep in mind that reprojection only works when you first

have a defined CRS for the raster object that you want to reproject. It

cannot be used if no CRS is defined. Lucky for us, the

DTM_hill_HARV has a defined CRS.

Data Tip

When we reproject a raster, we move it from one “grid” to another. Thus, we are modifying the data! Keep this in mind as we work with raster data.

To use the project() function, we need to define two

things:

- the object we want to reproject and

- the CRS that we want to reproject it to.

The syntax is project(RasterObject, crs)

We want the CRS of our hillshade to match the DTM_HARV

raster. We can thus assign the CRS of our DTM_HARV to our

hillshade within the project() function as follows:

crs(DTM_HARV). Note that we are using the

project() function on the raster object, not the

data.frame() we use for plotting with

ggplot.

First we will reproject our DTM_hill_HARV raster data to

match the DTM_HARV raster CRS:

R

DTM_hill_UTMZ18N_HARV <- project(DTM_hill_HARV,

crs(DTM_HARV))

Now we can compare the CRS of our original DTM hillshade and our new DTM hillshade, to see how they are different.

R

crs(DTM_hill_UTMZ18N_HARV, parse = TRUE)

OUTPUT

[1] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[2] " BASEGEOGCRS[\"WGS 84\","

[3] " DATUM[\"World Geodetic System 1984\","

[4] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[5] " LENGTHUNIT[\"metre\",1]]],"

[6] " PRIMEM[\"Greenwich\",0,"

[7] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[8] " ID[\"EPSG\",4326]],"

[9] " CONVERSION[\"UTM zone 18N\","

[10] " METHOD[\"Transverse Mercator\","

[11] " ID[\"EPSG\",9807]],"

[12] " PARAMETER[\"Latitude of natural origin\",0,"

[13] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[14] " ID[\"EPSG\",8801]],"

[15] " PARAMETER[\"Longitude of natural origin\",-75,"

[16] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[17] " ID[\"EPSG\",8802]],"

[18] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[19] " SCALEUNIT[\"unity\",1],"

[20] " ID[\"EPSG\",8805]],"

[21] " PARAMETER[\"False easting\",500000,"

[22] " LENGTHUNIT[\"metre\",1],"

[23] " ID[\"EPSG\",8806]],"

[24] " PARAMETER[\"False northing\",0,"

[25] " LENGTHUNIT[\"metre\",1],"

[26] " ID[\"EPSG\",8807]]],"

[27] " CS[Cartesian,2],"

[28] " AXIS[\"(E)\",east,"

[29] " ORDER[1],"

[30] " LENGTHUNIT[\"metre\",1]],"

[31] " AXIS[\"(N)\",north,"

[32] " ORDER[2],"

[33] " LENGTHUNIT[\"metre\",1]],"

[34] " USAGE["

[35] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[36] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[37] " BBOX[0,-78,84,-72]],"

[38] " ID[\"EPSG\",32618]]" R

crs(DTM_hill_HARV, parse = TRUE)

OUTPUT

[1] "GEOGCRS[\"WGS 84\","

[2] " DATUM[\"World Geodetic System 1984\","

[3] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[4] " LENGTHUNIT[\"metre\",1]]],"

[5] " PRIMEM[\"Greenwich\",0,"

[6] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[7] " CS[ellipsoidal,2],"

[8] " AXIS[\"geodetic latitude (Lat)\",north,"

[9] " ORDER[1],"

[10] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[11] " AXIS[\"geodetic longitude (Lon)\",east,"

[12] " ORDER[2],"

[13] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[14] " ID[\"EPSG\",4326]]" We can also compare the extent of the two objects.

R

ext(DTM_hill_UTMZ18N_HARV)

OUTPUT

SpatExtent : 731402.31567604, 733200.22199435, 4712407.19751409, 4713901.78222079 (xmin, xmax, ymin, ymax)R

ext(DTM_hill_HARV)

OUTPUT

SpatExtent : -72.1819236223343, -72.1606102223342, 42.5294079700285, 42.5423355900285 (xmin, xmax, ymin, ymax)Notice in the output above that the crs() of

DTM_hill_UTMZ18N_HARV is now UTM. However, the extent

values of DTM_hillUTMZ18N_HARV are different from

DTM_hill_HARV.

Challenge: Extent Change with CRS Change

Why do you think the two extents differ?

The extent for DTM_hill_UTMZ18N_HARV is in UTMs so the extent is in meters. The extent for DTM_hill_HARV is in lat/long so the extent is expressed in decimal degrees.

Deal with Raster Resolution

Let’s next have a look at the resolution of our reprojected hillshade versus our original data.

R

res(DTM_hill_UTMZ18N_HARV)

OUTPUT

[1] 1.001061 1.001061R

res(DTM_HARV)

OUTPUT

[1] 1 1These two resolutions are different, but they’re representing the

same data. We can tell R to force our newly reprojected raster to be 1m

x 1m resolution by adding a line of code res=1 within the

project() function. In the example below, we ensure a

resolution match by using res(DTM_HARV) as a variable.

R

DTM_hill_UTMZ18N_HARV <- project(DTM_hill_HARV,

crs(DTM_HARV),

res = res(DTM_HARV))

Now both our resolutions and our CRSs match, so we can plot these two data sets together. Let’s double-check our resolution to be sure:

R

res(DTM_hill_UTMZ18N_HARV)

OUTPUT

[1] 1 1R

res(DTM_HARV)

OUTPUT

[1] 1 1For plotting with ggplot(), we will need to create a

dataframe from our newly reprojected raster.

R

DTM_hill_HARV_2_df <- as.data.frame(DTM_hill_UTMZ18N_HARV, xy = TRUE)

We can now create a plot of this data.

R

ggplot() +

geom_raster(data = DTM_HARV_df ,

aes(x = x, y = y,

fill = HARV_dtmCrop)) +

geom_raster(data = DTM_hill_HARV_2_df,

aes(x = x, y = y,

alpha = HARV_DTMhill_WGS84)) +

scale_fill_gradientn(name = "Elevation", colors = terrain.colors(10)) +

coord_quickmap()

We have now successfully draped the Digital Terrain Model on top of our hillshade to produce a nice looking, textured map!

Challenge: Reproject, then Plot a Digital Terrain Model

Create a map of the San

Joaquin Experimental Range field site using the

SJER_DSMhill_WGS84.tif and SJER_dsmCrop.tif

files.

Reproject the data as necessary to make things line up!

R

# import DSM

DSM_SJER <-

rast("data/NEON-DS-Airborne-Remote-Sensing/SJER/DSM/SJER_dsmCrop.tif")

# import DSM hillshade

DSM_hill_SJER_WGS <-

rast("data/NEON-DS-Airborne-Remote-Sensing/SJER/DSM/SJER_DSMhill_WGS84.tif")

# reproject raster

DSM_hill_UTMZ18N_SJER <- project(DSM_hill_SJER_WGS,

crs(DSM_SJER),

res = 1)

# convert to data.frames

DSM_SJER_df <- as.data.frame(DSM_SJER, xy = TRUE)

DSM_hill_SJER_df <- as.data.frame(DSM_hill_UTMZ18N_SJER, xy = TRUE)

ggplot() +

geom_raster(data = DSM_hill_SJER_df,

aes(x = x, y = y,

alpha = SJER_DSMhill_WGS84)

) +

geom_raster(data = DSM_SJER_df,

aes(x = x, y = y,

fill = SJER_dsmCrop,

alpha=0.8)

) +

scale_fill_gradientn(name = "Elevation", colors = terrain.colors(10)) +

coord_quickmap()

Challenge: Reproject, then Plot a Digital Terrain Model (continued)

If you completed the San Joaquin plotting challenge in the Plot Raster Data in R episode, how does the map you just created compare to that map?

The maps look identical. Which is what they should be as the only difference is this one was reprojected from WGS84 to UTM prior to plotting.

Key Points

- In order to plot two raster data sets together, they must be in the same CRS.

- Use the

project()function to convert between CRSs.

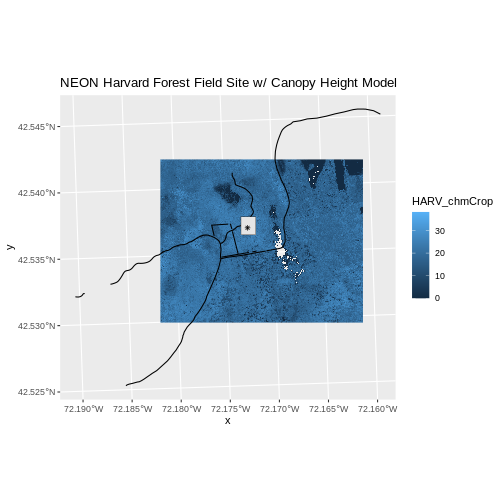

Content from Raster Calculations

Last updated on 2024-10-15 | Edit this page

Estimated time: 60 minutes

Overview

Questions

- How do I subtract one raster from another and extract pixel values for defined locations?

Objectives

- Perform a subtraction between two rasters using raster math.

- Perform a more efficient subtraction between two rasters using the

raster

lapp()function. - Export raster data as a GeoTIFF file.

Things You’ll Need To Complete This Episode

See the lesson homepage for detailed information about the software, data, and other prerequisites you will need to work through the examples in this episode.

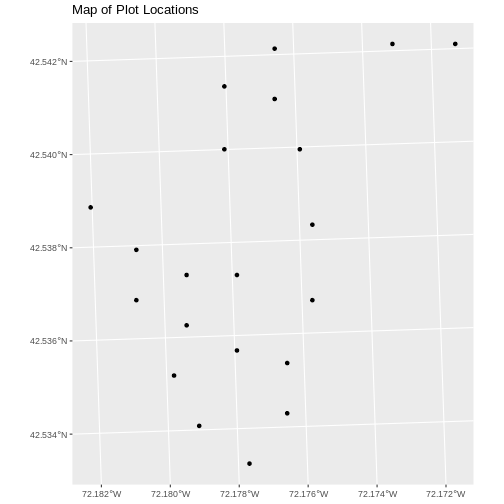

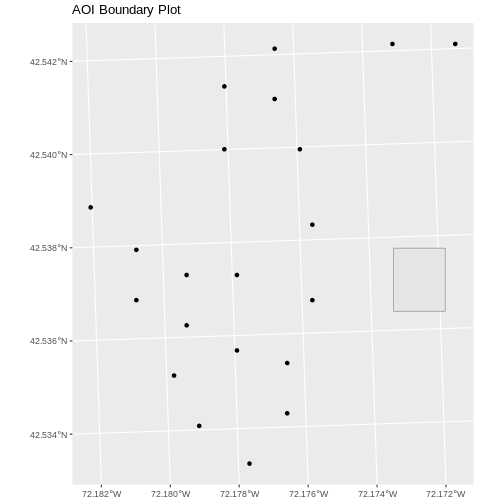

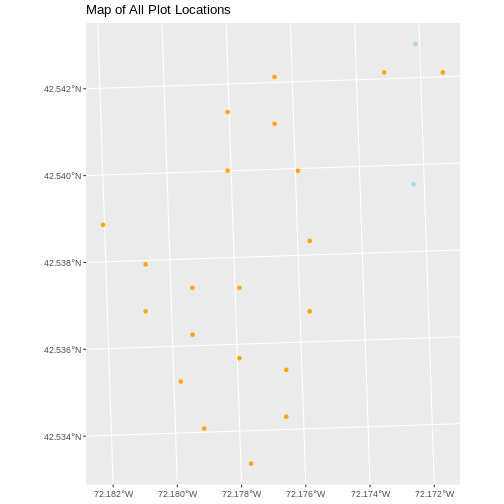

We often want to combine values of and perform calculations on

rasters to create a new output raster. This episode covers how to

subtract one raster from another using basic raster math and the

lapp() function. It also covers how to extract pixel values

from a set of locations - for example a buffer region around plot

locations at a field site.

Raster Calculations in R

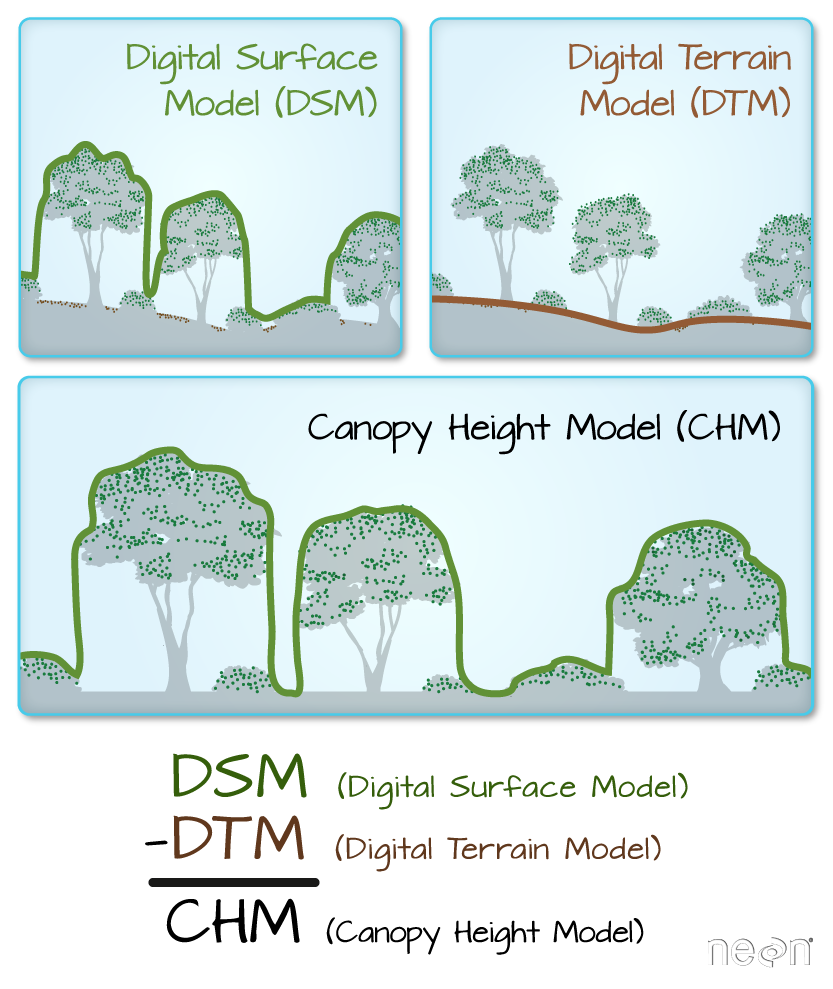

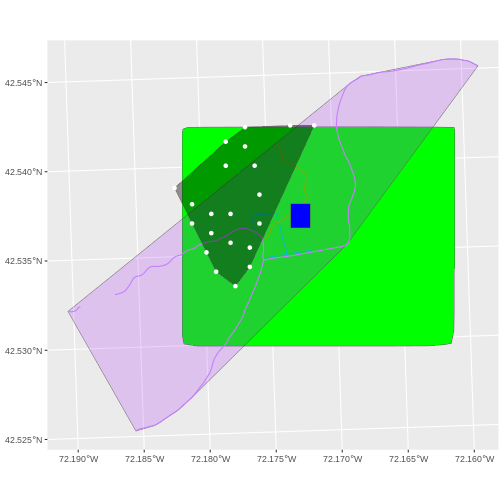

We often want to perform calculations on two or more rasters to create a new output raster. For example, if we are interested in mapping the heights of trees across an entire field site, we might want to calculate the difference between the Digital Surface Model (DSM, tops of trees) and the Digital Terrain Model (DTM, ground level). The resulting dataset is referred to as a Canopy Height Model (CHM) and represents the actual height of trees, buildings, etc. with the influence of ground elevation removed.

More Resources

- Check out more on LiDAR CHM, DTM and DSM in this NEON Data Skills overview tutorial: What is a CHM, DSM and DTM? About Gridded, Raster LiDAR Data.

Load the Data

For this episode, we will use the DTM and DSM from the NEON Harvard Forest Field site and San Joaquin Experimental Range, which we already have loaded from previous episodes.

Exercise

Use the describe() function to view information about

the DTM and DSM data files. Do the two rasters have the same or

different CRSs and resolutions? Do they both have defined minimum and

maximum values?

R

describe("data/NEON-DS-Airborne-Remote-Sensing/HARV/DTM/HARV_dtmCrop.tif")

OUTPUT

[1] "Driver: GTiff/GeoTIFF"

[2] "Files: data/NEON-DS-Airborne-Remote-Sensing/HARV/DTM/HARV_dtmCrop.tif"

[3] "Size is 1697, 1367"

[4] "Coordinate System is:"

[5] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[6] " BASEGEOGCRS[\"WGS 84\","

[7] " DATUM[\"World Geodetic System 1984\","

[8] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[9] " LENGTHUNIT[\"metre\",1]]],"

[10] " PRIMEM[\"Greenwich\",0,"

[11] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[12] " ID[\"EPSG\",4326]],"

[13] " CONVERSION[\"UTM zone 18N\","

[14] " METHOD[\"Transverse Mercator\","

[15] " ID[\"EPSG\",9807]],"

[16] " PARAMETER[\"Latitude of natural origin\",0,"

[17] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[18] " ID[\"EPSG\",8801]],"

[19] " PARAMETER[\"Longitude of natural origin\",-75,"

[20] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[21] " ID[\"EPSG\",8802]],"

[22] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[23] " SCALEUNIT[\"unity\",1],"

[24] " ID[\"EPSG\",8805]],"

[25] " PARAMETER[\"False easting\",500000,"

[26] " LENGTHUNIT[\"metre\",1],"

[27] " ID[\"EPSG\",8806]],"

[28] " PARAMETER[\"False northing\",0,"

[29] " LENGTHUNIT[\"metre\",1],"

[30] " ID[\"EPSG\",8807]]],"

[31] " CS[Cartesian,2],"

[32] " AXIS[\"(E)\",east,"

[33] " ORDER[1],"

[34] " LENGTHUNIT[\"metre\",1]],"

[35] " AXIS[\"(N)\",north,"

[36] " ORDER[2],"

[37] " LENGTHUNIT[\"metre\",1]],"

[38] " USAGE["

[39] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[40] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[41] " BBOX[0,-78,84,-72]],"

[42] " ID[\"EPSG\",32618]]"

[43] "Data axis to CRS axis mapping: 1,2"

[44] "Origin = (731453.000000000000000,4713838.000000000000000)"

[45] "Pixel Size = (1.000000000000000,-1.000000000000000)"

[46] "Metadata:"

[47] " AREA_OR_POINT=Area"

[48] "Image Structure Metadata:"

[49] " COMPRESSION=LZW"

[50] " INTERLEAVE=BAND"

[51] "Corner Coordinates:"

[52] "Upper Left ( 731453.000, 4713838.000) ( 72d10'52.71\"W, 42d32'32.18\"N)"

[53] "Lower Left ( 731453.000, 4712471.000) ( 72d10'54.71\"W, 42d31'47.92\"N)"

[54] "Upper Right ( 733150.000, 4713838.000) ( 72d 9'38.40\"W, 42d32'30.35\"N)"

[55] "Lower Right ( 733150.000, 4712471.000) ( 72d 9'40.41\"W, 42d31'46.08\"N)"

[56] "Center ( 732301.500, 4713154.500) ( 72d10'16.56\"W, 42d32' 9.13\"N)"

[57] "Band 1 Block=1697x1 Type=Float64, ColorInterp=Gray"

[58] " Min=304.560 Max=389.820 "

[59] " Minimum=304.560, Maximum=389.820, Mean=344.898, StdDev=15.861"

[60] " NoData Value=-9999"

[61] " Metadata:"

[62] " STATISTICS_MAXIMUM=389.81997680664"

[63] " STATISTICS_MEAN=344.8979433625"

[64] " STATISTICS_MINIMUM=304.55999755859"

[65] " STATISTICS_STDDEV=15.861471000978" R

describe("data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif")

OUTPUT

[1] "Driver: GTiff/GeoTIFF"

[2] "Files: data/NEON-DS-Airborne-Remote-Sensing/HARV/DSM/HARV_dsmCrop.tif"

[3] "Size is 1697, 1367"

[4] "Coordinate System is:"

[5] "PROJCRS[\"WGS 84 / UTM zone 18N\","

[6] " BASEGEOGCRS[\"WGS 84\","

[7] " DATUM[\"World Geodetic System 1984\","

[8] " ELLIPSOID[\"WGS 84\",6378137,298.257223563,"

[9] " LENGTHUNIT[\"metre\",1]]],"

[10] " PRIMEM[\"Greenwich\",0,"

[11] " ANGLEUNIT[\"degree\",0.0174532925199433]],"

[12] " ID[\"EPSG\",4326]],"

[13] " CONVERSION[\"UTM zone 18N\","

[14] " METHOD[\"Transverse Mercator\","

[15] " ID[\"EPSG\",9807]],"

[16] " PARAMETER[\"Latitude of natural origin\",0,"

[17] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[18] " ID[\"EPSG\",8801]],"

[19] " PARAMETER[\"Longitude of natural origin\",-75,"

[20] " ANGLEUNIT[\"degree\",0.0174532925199433],"

[21] " ID[\"EPSG\",8802]],"

[22] " PARAMETER[\"Scale factor at natural origin\",0.9996,"

[23] " SCALEUNIT[\"unity\",1],"

[24] " ID[\"EPSG\",8805]],"

[25] " PARAMETER[\"False easting\",500000,"

[26] " LENGTHUNIT[\"metre\",1],"

[27] " ID[\"EPSG\",8806]],"

[28] " PARAMETER[\"False northing\",0,"

[29] " LENGTHUNIT[\"metre\",1],"

[30] " ID[\"EPSG\",8807]]],"

[31] " CS[Cartesian,2],"

[32] " AXIS[\"(E)\",east,"

[33] " ORDER[1],"

[34] " LENGTHUNIT[\"metre\",1]],"

[35] " AXIS[\"(N)\",north,"

[36] " ORDER[2],"

[37] " LENGTHUNIT[\"metre\",1]],"

[38] " USAGE["

[39] " SCOPE[\"Engineering survey, topographic mapping.\"],"

[40] " AREA[\"Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela.\"],"

[41] " BBOX[0,-78,84,-72]],"

[42] " ID[\"EPSG\",32618]]"

[43] "Data axis to CRS axis mapping: 1,2"

[44] "Origin = (731453.000000000000000,4713838.000000000000000)"

[45] "Pixel Size = (1.000000000000000,-1.000000000000000)"

[46] "Metadata:"

[47] " AREA_OR_POINT=Area"

[48] "Image Structure Metadata:"

[49] " COMPRESSION=LZW"

[50] " INTERLEAVE=BAND"

[51] "Corner Coordinates:"

[52] "Upper Left ( 731453.000, 4713838.000) ( 72d10'52.71\"W, 42d32'32.18\"N)"

[53] "Lower Left ( 731453.000, 4712471.000) ( 72d10'54.71\"W, 42d31'47.92\"N)"

[54] "Upper Right ( 733150.000, 4713838.000) ( 72d 9'38.40\"W, 42d32'30.35\"N)"

[55] "Lower Right ( 733150.000, 4712471.000) ( 72d 9'40.41\"W, 42d31'46.08\"N)"

[56] "Center ( 732301.500, 4713154.500) ( 72d10'16.56\"W, 42d32' 9.13\"N)"

[57] "Band 1 Block=1697x1 Type=Float64, ColorInterp=Gray"

[58] " Min=305.070 Max=416.070 "

[59] " Minimum=305.070, Maximum=416.070, Mean=359.853, StdDev=17.832"

[60] " NoData Value=-9999"

[61] " Metadata:"

[62] " STATISTICS_MAXIMUM=416.06997680664"

[63] " STATISTICS_MEAN=359.85311802914"

[64] " STATISTICS_MINIMUM=305.07000732422"

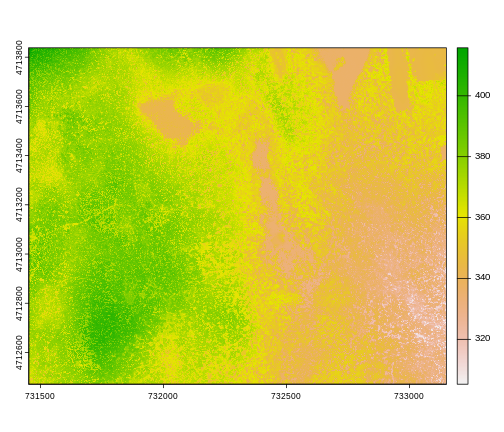

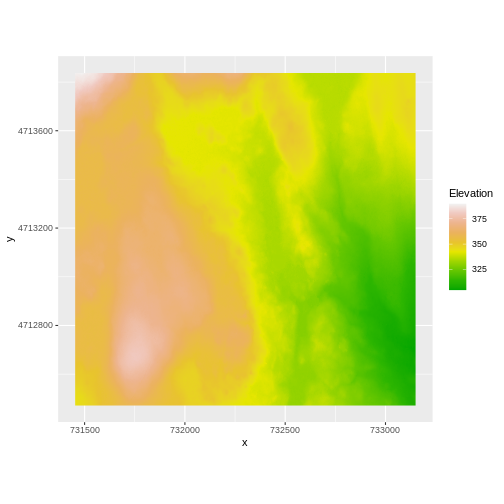

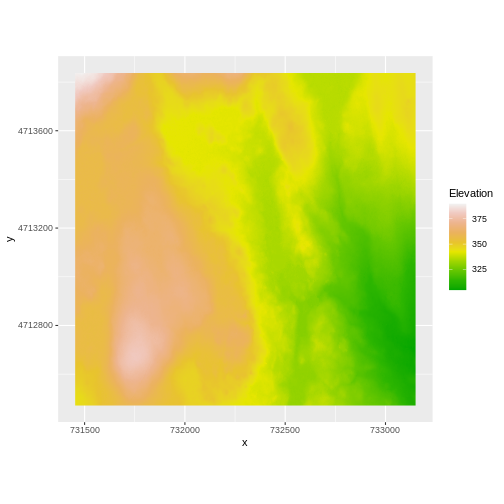

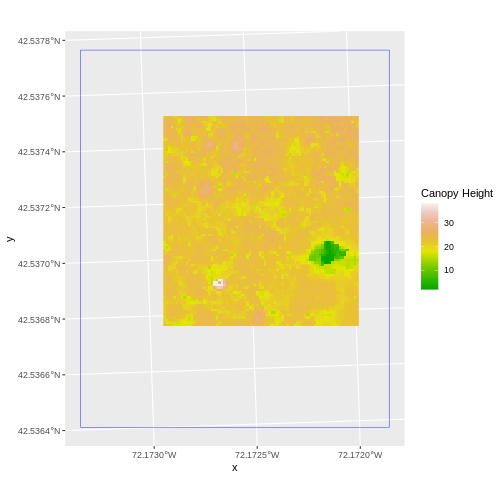

[65] " STATISTICS_STDDEV=17.83169335933" We’ve already loaded and worked with these two data files in earlier episodes. Let’s plot them each once more to remind ourselves what this data looks like. First we’ll plot the DTM elevation data:

R

ggplot() +

geom_raster(data = DTM_HARV_df ,

aes(x = x, y = y, fill = HARV_dtmCrop)) +

scale_fill_gradientn(name = "Elevation", colors = terrain.colors(10)) +

coord_quickmap()

And then the DSM elevation data:

R

ggplot() +

geom_raster(data = DSM_HARV_df ,

aes(x = x, y = y, fill = HARV_dsmCrop)) +

scale_fill_gradientn(name = "Elevation", colors = terrain.colors(10)) +

coord_quickmap()

Two Ways to Perform Raster Calculations

We can calculate the difference between two rasters in two different ways:

- by directly subtracting the two rasters in R using raster math

or for more efficient processing - particularly if our rasters are large and/or the calculations we are performing are complex:

- using the

lapp()function.

Raster Math & Canopy Height Models

We can perform raster calculations by subtracting (or adding, multiplying, etc) two rasters. In the geospatial world, we call this “raster math”.

Let’s subtract the DTM from the DSM to create a Canopy Height Model.

After subtracting, let’s create a dataframe so we can plot with

ggplot.

R

CHM_HARV <- DSM_HARV - DTM_HARV

CHM_HARV_df <- as.data.frame(CHM_HARV, xy = TRUE)

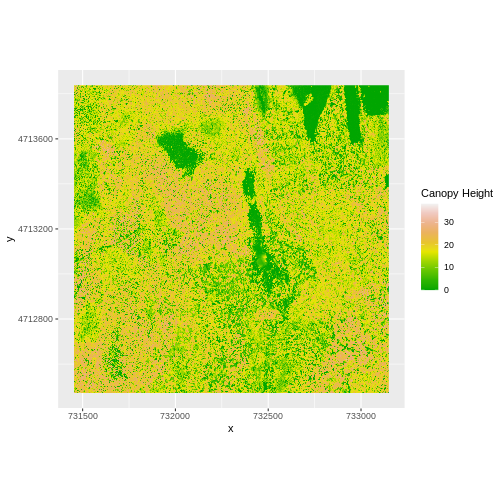

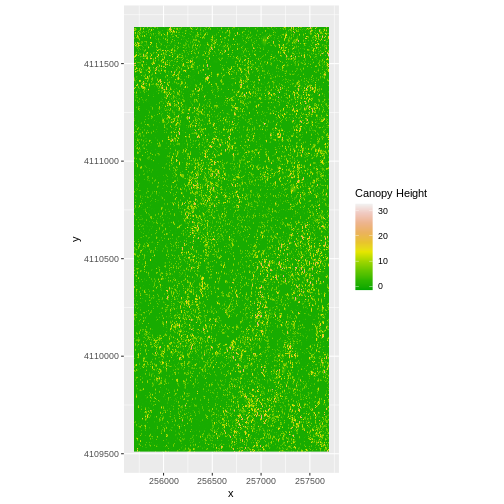

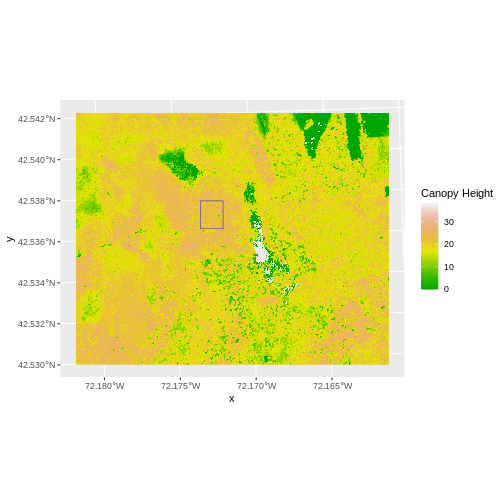

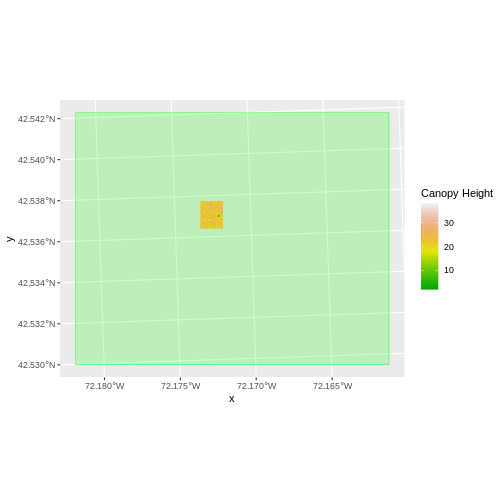

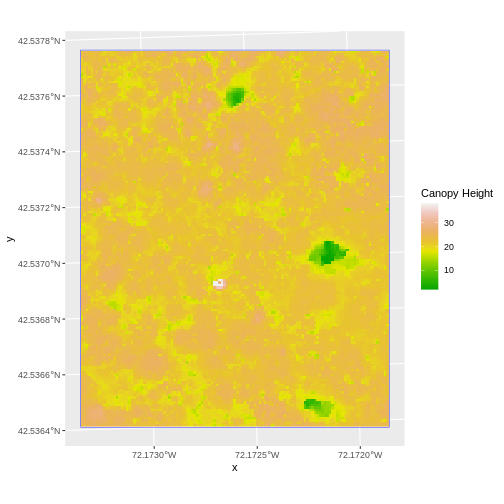

We can now plot the output CHM.

R

ggplot() +

geom_raster(data = CHM_HARV_df ,

aes(x = x, y = y, fill = HARV_dsmCrop)) +

scale_fill_gradientn(name = "Canopy Height", colors = terrain.colors(10)) +

coord_quickmap()

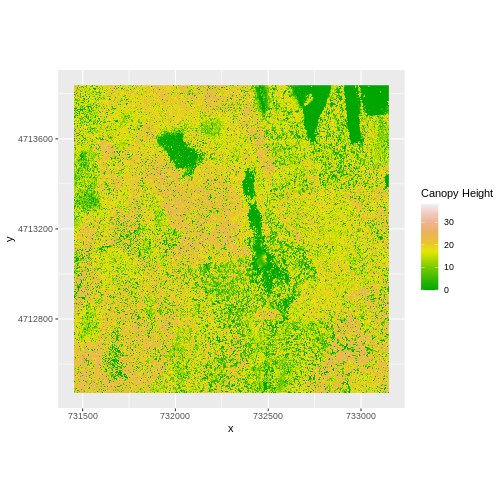

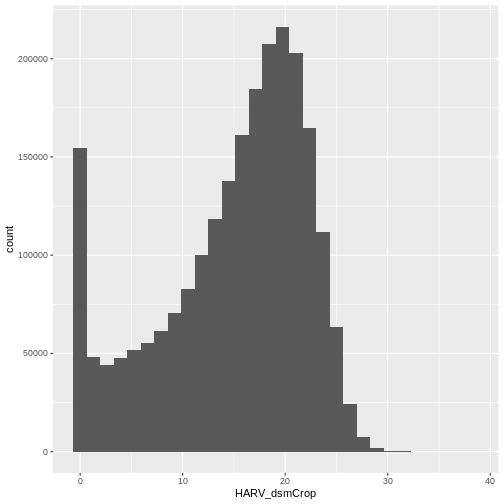

Let’s have a look at the distribution of values in our newly created Canopy Height Model (CHM).

R

ggplot(CHM_HARV_df) +

geom_histogram(aes(HARV_dsmCrop))

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

Notice that the range of values for the output CHM is between 0 and 30 meters. Does this make sense for trees in Harvard Forest?

Challenge: Explore CHM Raster Values

It’s often a good idea to explore the range of values in a raster dataset just like we might explore a dataset that we collected in the field.

- What is the min and maximum value for the Harvard Forest Canopy

Height Model (

CHM_HARV) that we just created? - What are two ways you can check this range of data for

CHM_HARV? - What is the distribution of all the pixel values in the CHM?

- Plot a histogram with 6 bins instead of the default and change the color of the histogram.

- Plot the

CHM_HARVraster using breaks that make sense for the data. Include an appropriate color palette for the data, plot title and no axes ticks / labels.

- There are missing values in our data, so we need to specify

na.rm = TRUE.

R

min(CHM_HARV_df$HARV_dsmCrop, na.rm = TRUE)

OUTPUT

[1] 0R

max(CHM_HARV_df$HARV_dsmCrop, na.rm = TRUE)

OUTPUT

[1] 38.16998- Possible ways include:

- Create a histogram

- Use the

min(),max(), andrange()functions. - Print the object and look at the

valuesattribute.

R

ggplot(CHM_HARV_df) +

geom_histogram(aes(HARV_dsmCrop))

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

R

ggplot(CHM_HARV_df) +

geom_histogram(aes(HARV_dsmCrop), colour="black",

fill="darkgreen", bins = 6)

R

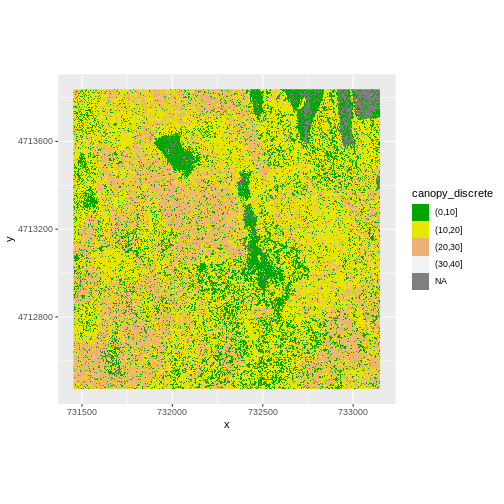

custom_bins <- c(0, 10, 20, 30, 40)

CHM_HARV_df <- CHM_HARV_df %>%

mutate(canopy_discrete = cut(HARV_dsmCrop,

breaks = custom_bins))

ggplot() +

geom_raster(data = CHM_HARV_df , aes(x = x, y = y,

fill = canopy_discrete)) +

scale_fill_manual(values = terrain.colors(4)) +

coord_quickmap()

Efficient Raster Calculations

Raster math, like we just did, is an appropriate approach to raster calculations if:

- The rasters we are using are small in size.

- The calculations we are performing are simple.

However, raster math is a less efficient approach as computation becomes more complex or as file sizes become large.

The lapp() function takes two or more rasters and

applies a function to them using efficient processing methods. The

syntax is

outputRaster <- lapp(x, fun=functionName)

In which raster can be either a SpatRaster or a SpatRasterDataset

which is an object that holds rasters. See help(sds).

Data Tip

To create a SpatRasterDataset, we call the function sds

which can take a list of raster objects (each one created by calling

rast).

Let’s perform the same subtraction calculation that we calculated

above using raster math, using the lapp() function.

Data Tip

A custom function consists of a defined set of commands performed on

a input object. Custom functions are particularly useful for tasks that

need to be repeated over and over in the code. A simplified syntax for

writing a custom function in R is:

function_name <- function(variable1, variable2) { WhatYouWantDone, WhatToReturn}

R

CHM_ov_HARV <- lapp(sds(list(DSM_HARV, DTM_HARV)),

fun = function(r1, r2) { return( r1 - r2) })

Next we need to convert our new object to a data frame for plotting

with ggplot.

R

CHM_ov_HARV_df <- as.data.frame(CHM_ov_HARV, xy = TRUE)

Now we can plot the CHM:

R

ggplot() +

geom_raster(data = CHM_ov_HARV_df,

aes(x = x, y = y, fill = HARV_dsmCrop)) +

scale_fill_gradientn(name = "Canopy Height", colors = terrain.colors(10)) +

coord_quickmap()

How do the plots of the CHM created with manual raster math and the

lapp() function compare?

Export a GeoTIFF

Now that we’ve created a new raster, let’s export the data as a

GeoTIFF file using the writeRaster() function.

When we write this raster object to a GeoTIFF file we’ll name it

CHM_HARV.tiff. This name allows us to quickly remember both

what the data contains (CHM data) and for where (HARVard Forest). The

writeRaster() function by default writes the output file to

your working directory unless you specify a full file path.

We will specify the output format (“GTiff”), the no data value

NAflag = -9999. We will also tell R to overwrite any data

that is already in a file of the same name.

R

writeRaster(CHM_ov_HARV, "CHM_HARV.tiff",

filetype="GTiff",

overwrite=TRUE,

NAflag=-9999)

writeRaster() Options

The function arguments that we used above include:

-

filetype: specify that the format will be

GTiffor GeoTIFF. - overwrite: If TRUE, R will overwrite any existing file with the same name in the specified directory. USE THIS SETTING WITH CAUTION!

-

NAflag: set the GeoTIFF tag for

NoDataValueto -9999, the National Ecological Observatory Network’s (NEON) standardNoDataValue.

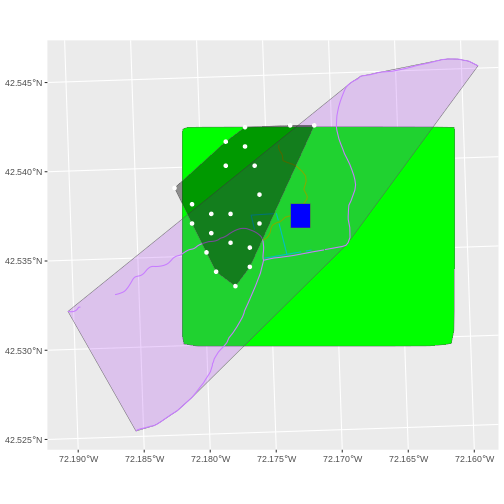

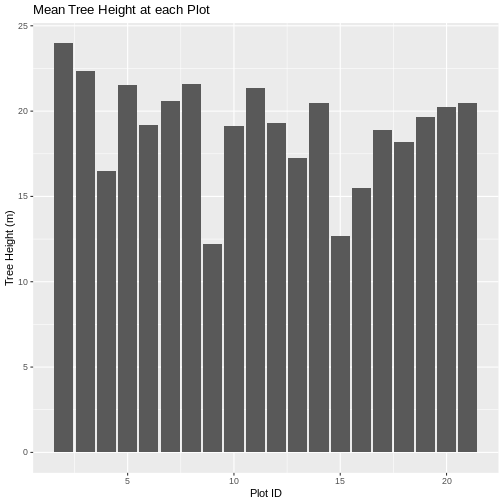

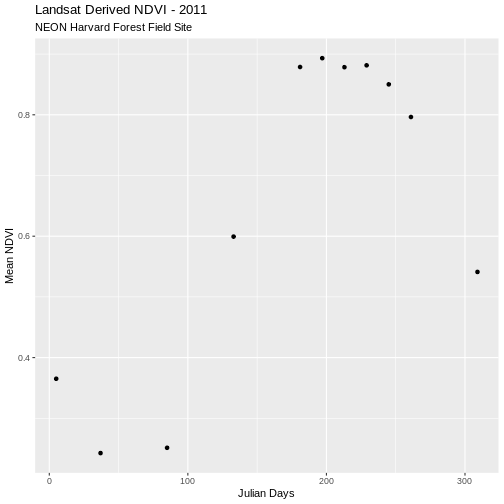

Challenge: Explore the NEON San Joaquin Experimental Range Field Site

Data are often more interesting and powerful when we compare them across various locations. Let’s compare some data collected over Harvard Forest to data collected in Southern California. The NEON San Joaquin Experimental Range (SJER) field site located in Southern California has a very different ecosystem and climate than the NEON Harvard Forest Field Site in Massachusetts.

Import the SJER DSM and DTM raster files and create a Canopy Height

Model. Then compare the two sites. Be sure to name your R objects and

outputs carefully, as follows: objectType_SJER

(e.g. DSM_SJER). This will help you keep track of data from

different sites!

- You should have the DSM and DTM data for the SJER site already loaded from the Plot Raster Data in R episode.) Don’t forget to check the CRSs and units of the data.

- Create a CHM from the two raster layers and check to make sure the data are what you expect.

- Plot the CHM from SJER.

- Export the SJER CHM as a GeoTIFF.

- Compare the vegetation structure of the Harvard Forest and San Joaquin Experimental Range.

- Use the

lapp()function to subtract the two rasters & create the CHM.

R

CHM_ov_SJER <- lapp(sds(list(DSM_SJER, DTM_SJER)),

fun = function(r1, r2){ return(r1 - r2) })

Convert the output to a dataframe:

R

CHM_ov_SJER_df <- as.data.frame(CHM_ov_SJER, xy = TRUE)

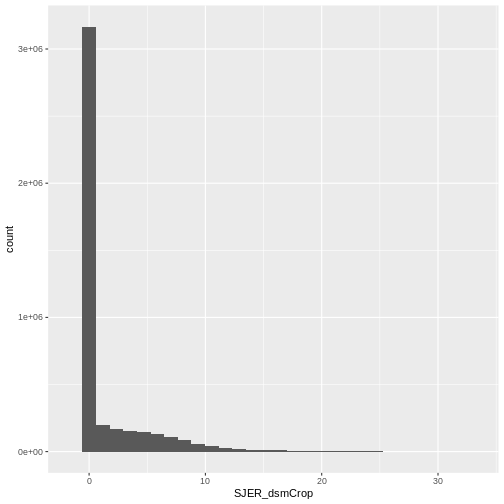

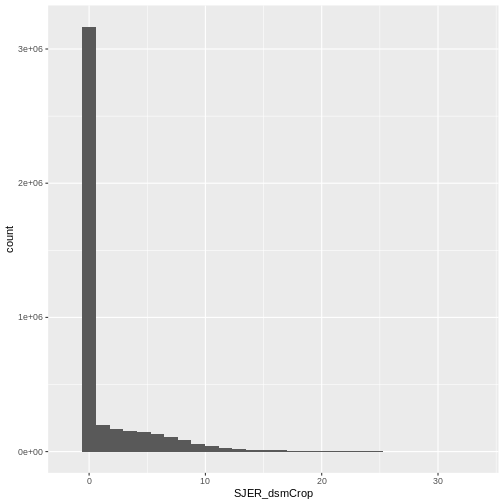

Create a histogram to check that the data distribution makes sense:

R

ggplot(CHM_ov_SJER_df) +

geom_histogram(aes(SJER_dsmCrop))

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

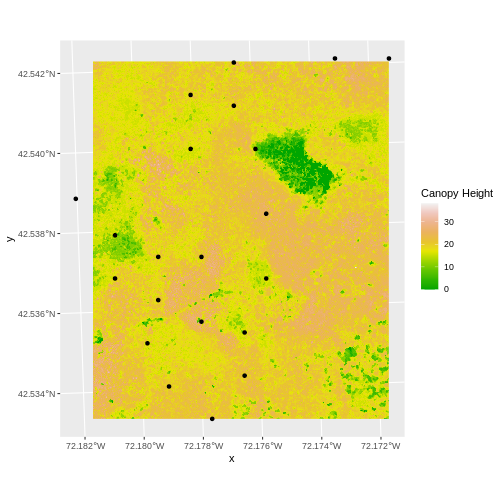

- Create a plot of the CHM:

R

ggplot() +

geom_raster(data = CHM_ov_SJER_df,

aes(x = x, y = y,

fill = SJER_dsmCrop)

) +

scale_fill_gradientn(name = "Canopy Height",

colors = terrain.colors(10)) +

coord_quickmap()

- Export the CHM object to a file:

R

writeRaster(CHM_ov_SJER, "chm_ov_SJER.tiff",

filetype = "GTiff",

overwrite = TRUE,

NAflag = -9999)

- Compare the SJER and HARV CHMs. Tree heights are much shorter in SJER. You can confirm this by looking at the histograms of the two CHMs.

R

ggplot(CHM_HARV_df) +

geom_histogram(aes(HARV_dsmCrop))

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

R

ggplot(CHM_ov_SJER_df) +

geom_histogram(aes(SJER_dsmCrop))

OUTPUT

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

Key Points

- Rasters can be computed on using mathematical functions.

- The

lapp()function provides an efficient way to do raster math. - The

writeRaster()function can be used to write raster data to a file.

Content from Work with Multi-Band Rasters

Last updated on 2024-10-15 | Edit this page

Estimated time: 60 minutes

Overview

Questions

- How can I visualize individual and multiple bands in a raster object?

Objectives

- Identify a single vs. a multi-band raster file.

- Import multi-band rasters into R using the

terrapackage. - Plot multi-band color image rasters in R using the

ggplotpackage.

Things You’ll Need To Complete This Episode

See the lesson homepage for detailed information about the software, data, and other prerequisites you will need to work through the examples in this episode.

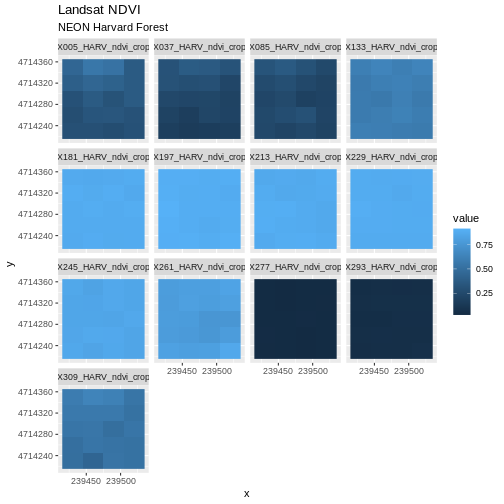

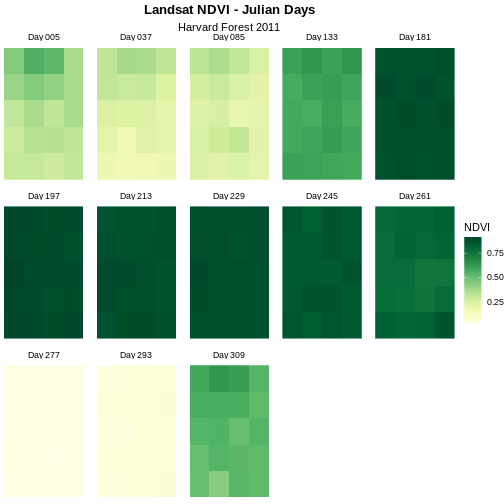

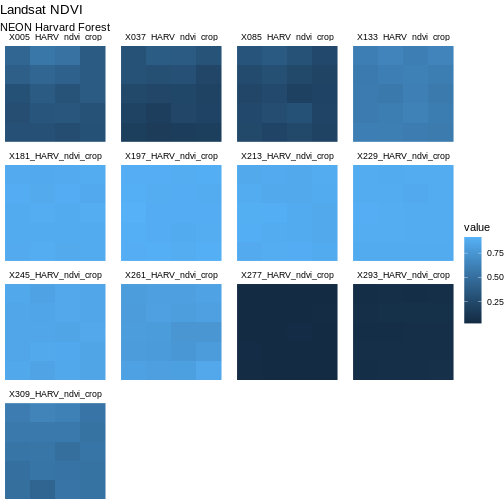

We introduced multi-band raster data in an earlier lesson. This episode explores how to import and plot a multi-band raster in R.

Getting Started with Multi-Band Data in R

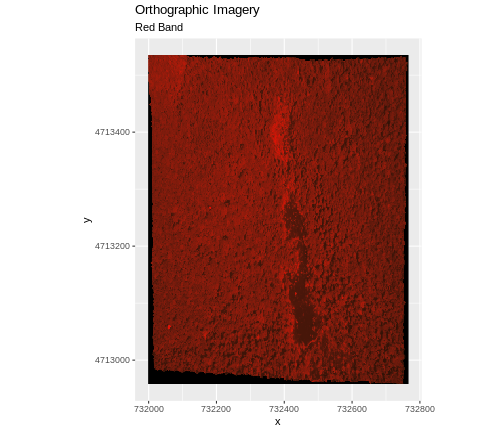

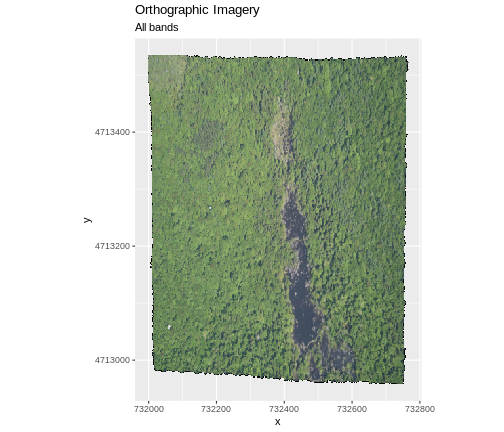

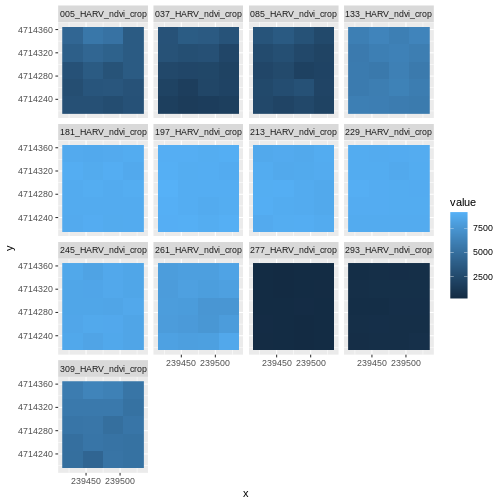

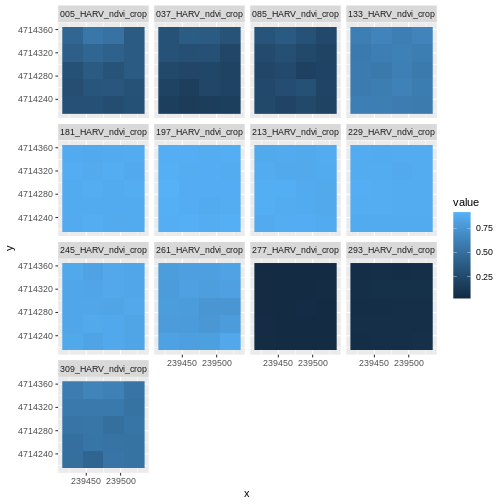

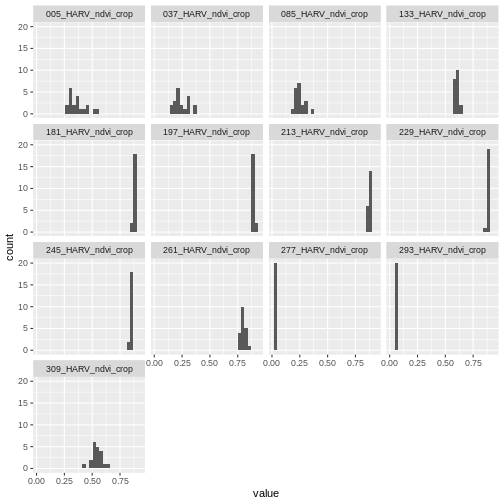

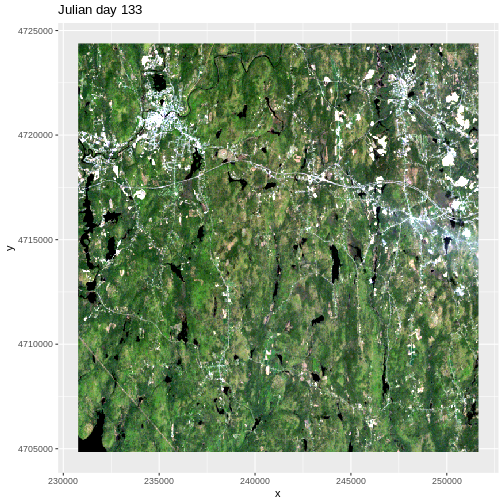

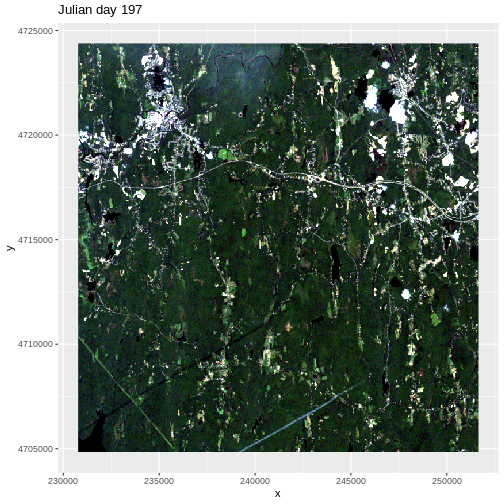

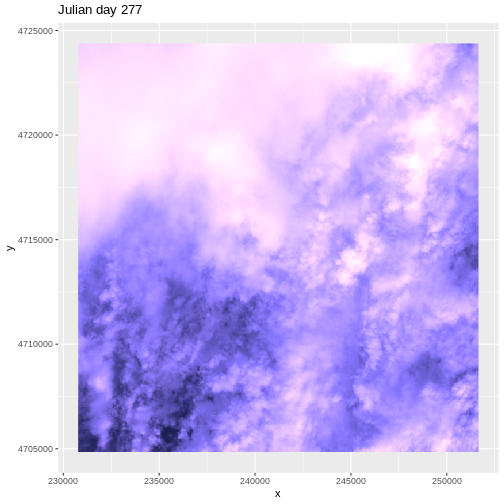

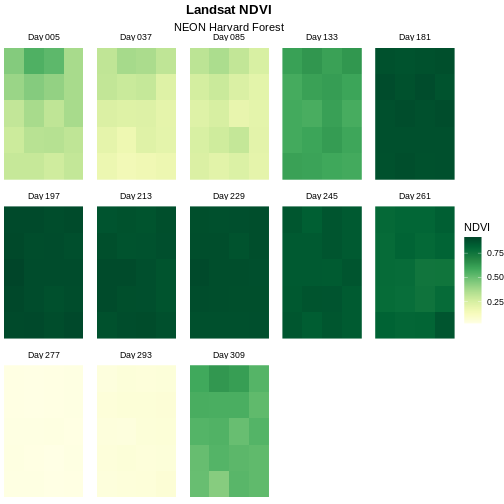

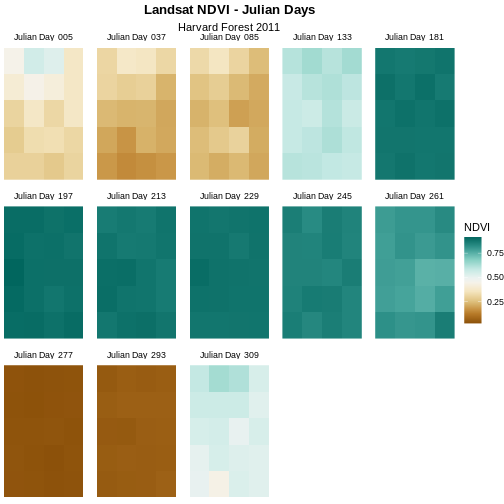

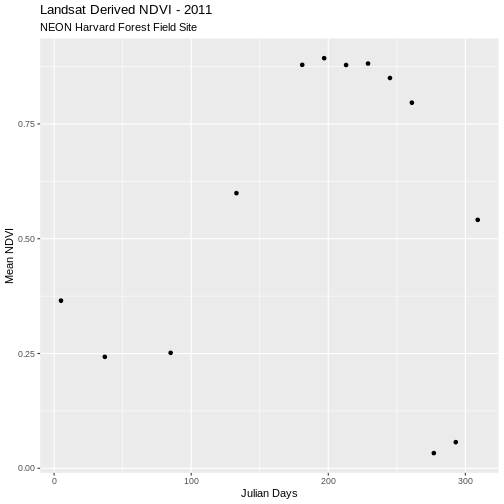

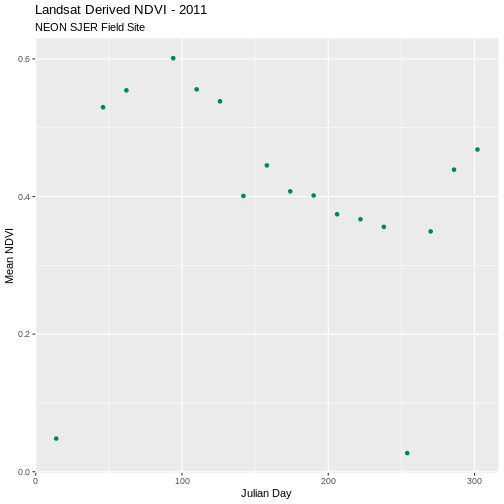

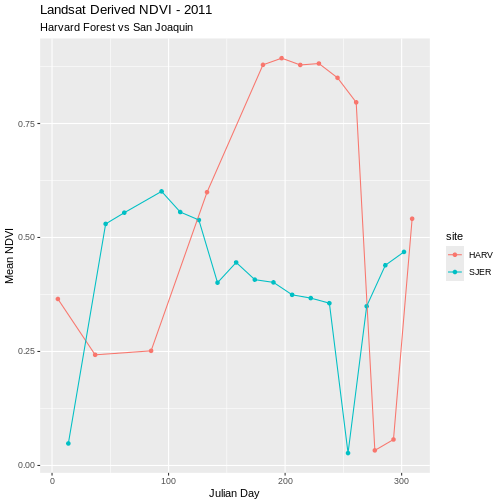

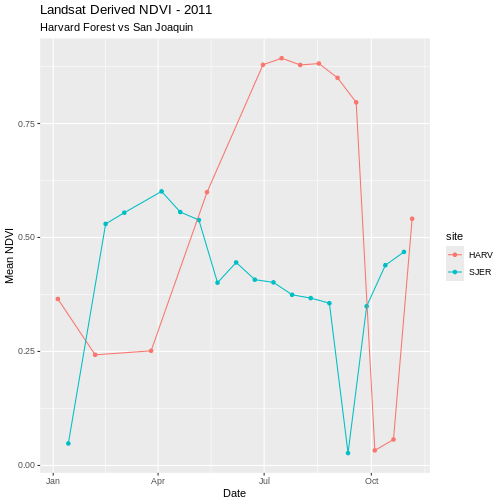

In this episode, the multi-band data that we are working with is imagery collected using the NEON Airborne Observation Platform high resolution camera over the NEON Harvard Forest field site. Each RGB image is a 3-band raster. The same steps would apply to working with a multi-spectral image with 4 or more bands - like Landsat imagery.

By using the rast() function along with the

lyrs parameter, we can read specific raster bands (i.e. the

first one); omitting this parameter would read instead all bands.

R

RGB_band1_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/RGB_Imagery/HARV_RGB_Ortho.tif",

lyrs = 1)

We need to convert this data to a data frame in order to plot it with

ggplot.

R

RGB_band1_HARV_df <- as.data.frame(RGB_band1_HARV, xy = TRUE)

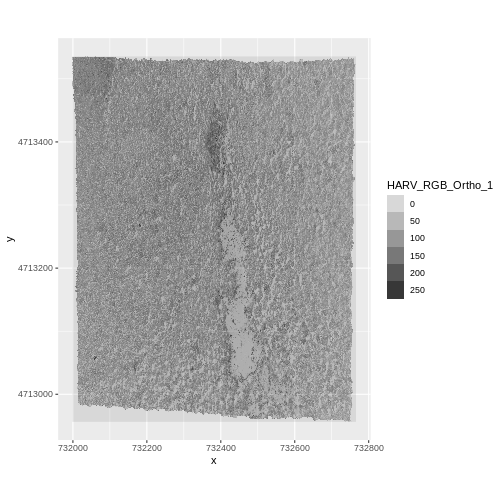

R

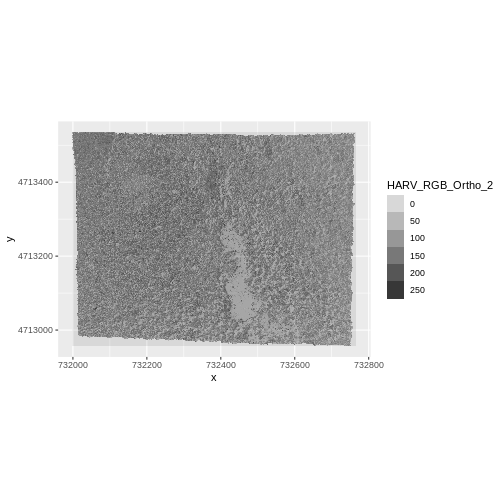

ggplot() +

geom_raster(data = RGB_band1_HARV_df,

aes(x = x, y = y, alpha = HARV_RGB_Ortho_1)) +

coord_quickmap()

Challenge

View the attributes of this band. What are its dimensions, CRS, resolution, min and max values, and band number?

R

RGB_band1_HARV

OUTPUT

class : SpatRaster

dimensions : 2317, 3073, 1 (nrow, ncol, nlyr)

resolution : 0.25, 0.25 (x, y)

extent : 731998.5, 732766.8, 4712956, 4713536 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source : HARV_RGB_Ortho.tif

name : HARV_RGB_Ortho_1

min value : 0

max value : 255 Notice that when we look at the attributes of this band, we see:

dimensions : 2317, 3073, 1 (nrow, ncol, nlyr)

This is R telling us that we read only one its bands.

Data Tip

The number of bands associated with a raster’s file can also be

determined using the describe() function: syntax is

describe(sources(RGB_band1_HARV)).

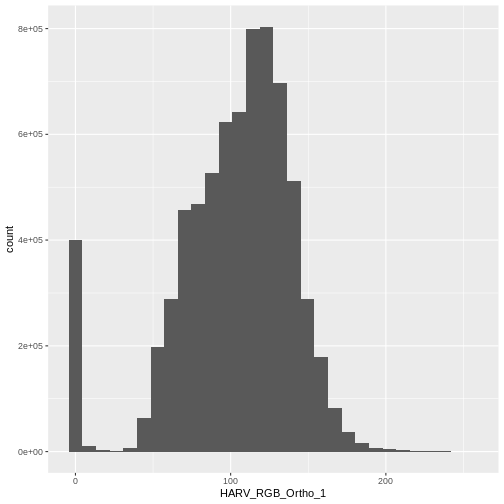

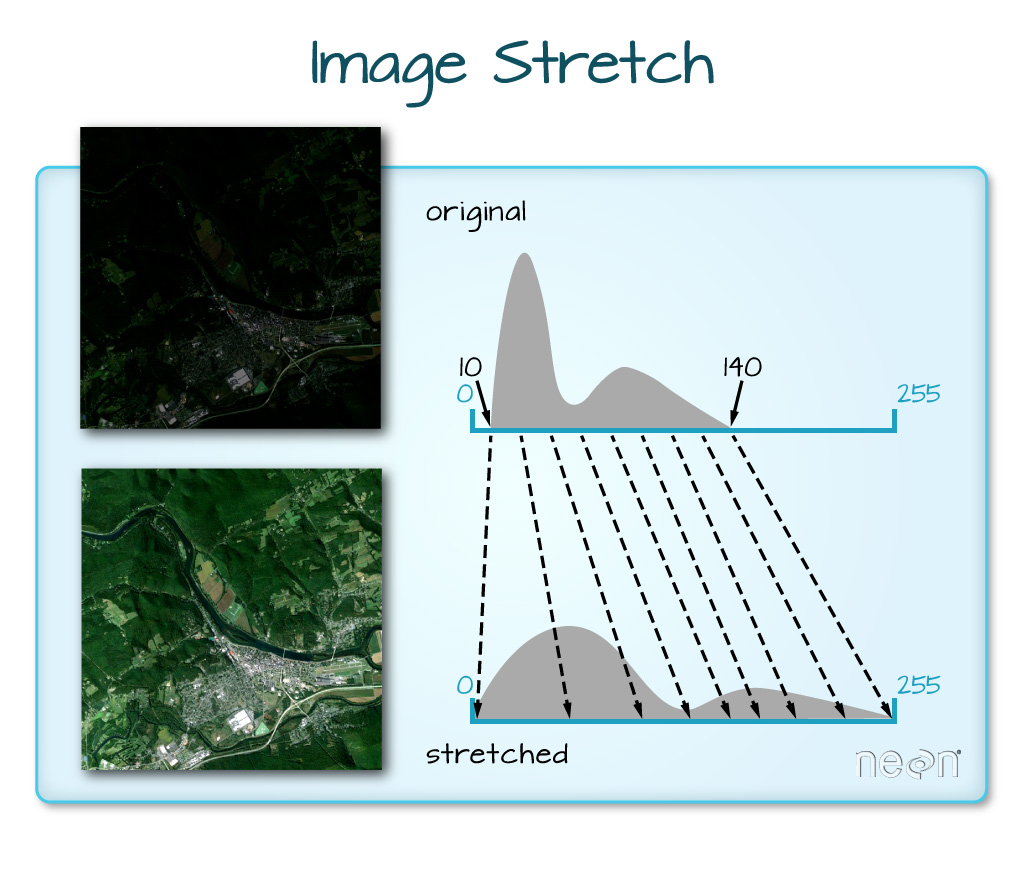

Image Raster Data Values

As we saw in the previous exercise, this raster contains values between 0 and 255. These values represent degrees of brightness associated with the image band. In the case of a RGB image (red, green and blue), band 1 is the red band. When we plot the red band, larger numbers (towards 255) represent pixels with more red in them (a strong red reflection). Smaller numbers (towards 0) represent pixels with less red in them (less red was reflected). To plot an RGB image, we mix red + green + blue values into one single color to create a full color image - similar to the color image a digital camera creates.

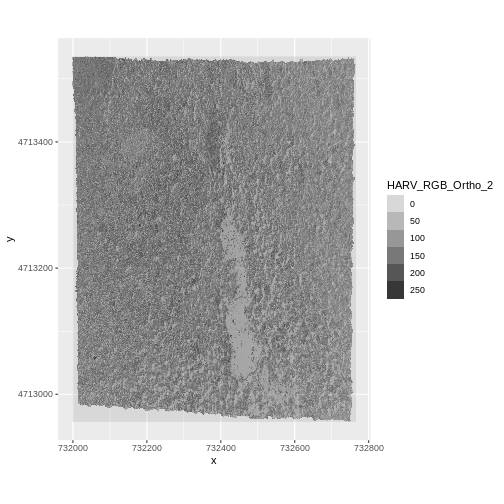

Import A Specific Band

We can use the rast() function to import specific bands

in our raster object by specifying which band we want with

lyrs = N (N represents the band number we want to work

with). To import the green band, we would use lyrs = 2.

R

RGB_band2_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/RGB_Imagery/HARV_RGB_Ortho.tif",

lyrs = 2)

We can convert this data to a data frame and plot the same way we plotted the red band:

R

RGB_band2_HARV_df <- as.data.frame(RGB_band2_HARV, xy = TRUE)

R

ggplot() +

geom_raster(data = RGB_band2_HARV_df,

aes(x = x, y = y, alpha = HARV_RGB_Ortho_2)) +

coord_equal()

Challenge: Making Sense of Single Band Images

Compare the plots of band 1 (red) and band 2 (green). Is the forested area darker or lighter in band 2 (the green band) compared to band 1 (the red band)?

We’d expect a brighter value for the forest in band 2 (green) than in band 1 (red) because the leaves on trees of most often appear “green” - healthy leaves reflect MORE green light than red light.

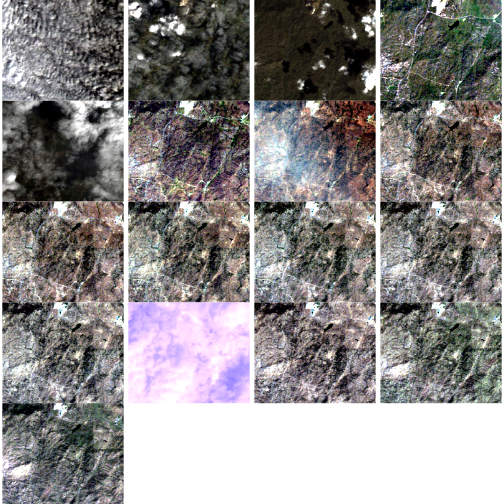

Raster Stacks in R

Next, we will work with all three image bands (red, green and blue) as an R raster object. We will then plot a 3-band composite, or full color, image.

To bring in all bands of a multi-band raster, we use

therast() function.

R

RGB_stack_HARV <-

rast("data/NEON-DS-Airborne-Remote-Sensing/HARV/RGB_Imagery/HARV_RGB_Ortho.tif")

Let’s preview the attributes of our stack object:

R

RGB_stack_HARV

OUTPUT

class : SpatRaster

dimensions : 2317, 3073, 3 (nrow, ncol, nlyr)

resolution : 0.25, 0.25 (x, y)

extent : 731998.5, 732766.8, 4712956, 4713536 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source : HARV_RGB_Ortho.tif

names : HARV_RGB_Ortho_1, HARV_RGB_Ortho_2, HARV_RGB_Ortho_3

min values : 0, 0, 0

max values : 255, 255, 255 We can view the attributes of each band in the stack in a single output. For example, if we had hundreds of bands, we could specify which band we’d like to view attributes for using an index value:

R

RGB_stack_HARV[[2]]

OUTPUT

class : SpatRaster

dimensions : 2317, 3073, 1 (nrow, ncol, nlyr)

resolution : 0.25, 0.25 (x, y)

extent : 731998.5, 732766.8, 4712956, 4713536 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source : HARV_RGB_Ortho.tif

name : HARV_RGB_Ortho_2

min value : 0

max value : 255 We can also use the ggplot functions to plot the data in

any layer of our raster object. Remember, we need to convert to a data

frame first.

R

RGB_stack_HARV_df <- as.data.frame(RGB_stack_HARV, xy = TRUE)

Each band in our RasterStack gets its own column in the data frame. Thus we have:

R

str(RGB_stack_HARV_df)

OUTPUT

'data.frame': 7120141 obs. of 5 variables:

$ x : num 731999 731999 731999 731999 732000 ...